Catholic Channel

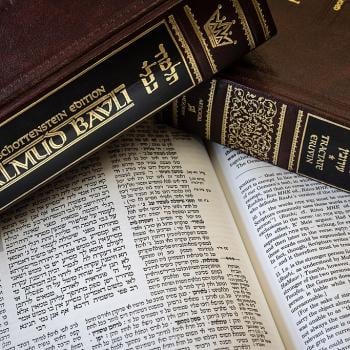

Roman Catholicism is a worldwide religious tradition of some 1.1 billion members. It traces its history to Jesus of Nazareth, an itinerant preacher in the area around Jerusalem during the period of Roman occupation, in the early 30s of the Common Era. Its members congregate in a communion of churches headed by bishops, whose role originated with the disciples of Jesus. Explore Catholic perspectives and opinions on the religion, its history, culture, and more.