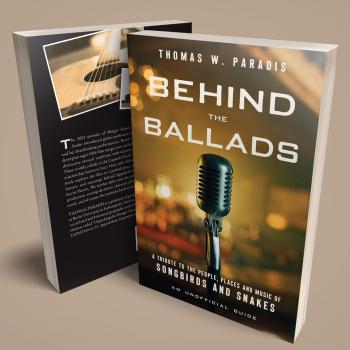

An article that my colleague Ankur Gupta and I wrote together, exploring the intersection of science fiction, artificial intelligence, wisdom, ethics, and religion, has appeared in a special issue of the journal Religions. The special issue is titled “So Say We All: Religion and Society in Science Fiction” and our article has the title “Writing a Moral Code: Algorithms for Ethical Reasoning by Humans and Machines.” Here’s the abstract:

The moral and ethical challenges of living in community pertain not only to the intersection of human beings one with another, but also our interactions with our machine creations. This article explores the philosophical and theological framework for reasoning and decision-making through the lens of science fiction, religion, and artificial intelligence (both real and imagined). In comparing the programming of autonomous machines with human ethical deliberation, we discover that both depend on a concrete ordering of priorities derived from a clearly defined value system.

Visit the journal’s website to read the whole thing online or download the article in PDF format.

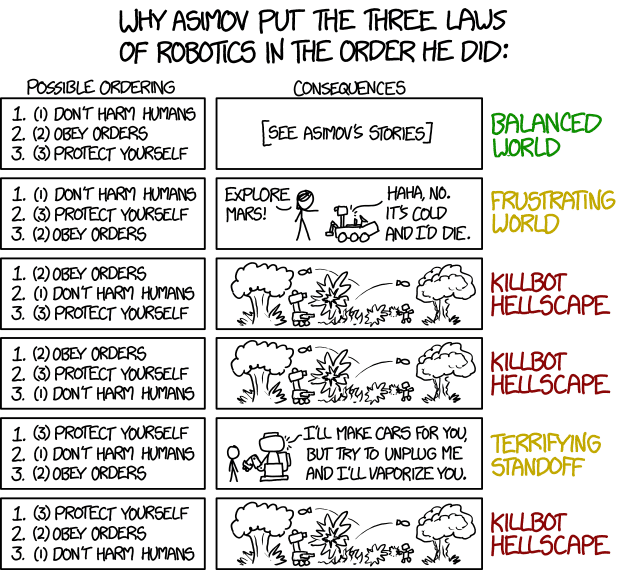

If the article seems too long to keep your interest, the XKCD cartoon below about Asimov’s Three Laws of Robotics sums up one crucial point that we explore. But I really do hope that the cartoon will whet your appetite to see what we do with it, rather than serve as a substitute for reading the article!

The journal, being open access online, makes articles available as soon as they are through the peer review and editing processes. Ours was the first through that process, and I’m eagerly looking forward to reading the other contributions!

Elsewhere online, you can explore some recent articles related to at least some aspects of these intersections, if not always quite as many of the threads as Ankur and I sought to incorporate:

An NSF grant to explore the ethics of driverless cars

Only a Game on self-braking cars (that’s the blog of the author of The Virtuous Cyborg, where you’ll find more on this subject)

Saving ignorance from AI in Nautilus

New Humanist on AI and common sense

Vox and IO9 on woke droids and more from the Star Wars universe

David Brin on science fiction scenarios

Catholic thinkers on the ethics of artificial intelligence

Gillian Whitaker shared the list of authors contributing to a collection themed around AI and robots

https://geekdomhouse.com/android-soup-for-the-soul-how-robots-model-humanity/

http://speculativefaith.lorehaven.com/imago-hominis/

In the European Union, the decision was made recently to recognize robots as persons – in the same sense that corporations can be persons before the law.

https://scienceandbelief.org/2018/07/19/summer-special-could-a-robot-ever-have-a-real-human-identity/

Lots from (or via) 3 Quarks Daily:

Automating Inequality: How High Tech Tools Profile, Police, and Punish the Poor

Artificial Intelligence Is Infiltrating Medicine — But Is It Ethical?

Will humans ever conquer mortality by merging with technology?

To Build Truly Intelligent Machines, Teach Them Cause and Effect

It’s Time for Technology to Serve all Humankind with Unconditional Basic Income

How a Pioneer of Machine Learning Became One of Its Sharpest Critics

The New Yorker on how frightened we should be of AI

https://www.thetechedvocate.org/5-great-ted-talks-on-the-potential-of-artificial-intelligence/

A couple from Steve Wiggins:

New York Times article on whether there is a “smarter path” to AI

https://religiousstudiesproject.com/2018/06/05/religious-studies-opportunities-digest-5-june-2018/

https://relcfp.tumblr.com/post/174156653006/boston-university-graduate-student-conference

Finally, let me link to a classic article by Robert Geraci about AI.