In the first decade of the 21st century, an unsexy, inoffensive software markup language called XML solved a problem that has been the predicate for all major historical transformations: extracting content from its conventional forms and repurposing this content in ways never before imagined. XML became the vehicle for liberating data – as digital representations of the stuff of the material world – from established publishing formats. With almost no one paying attention, new possibilities for endlessly reproducing, recombining, and remapping data emerged early in the second decade of the 21st century. This “Big Data” revolution heralded and enabled the profound advances in artificial intelligence and machine learning of which we are only now becoming fully aware.

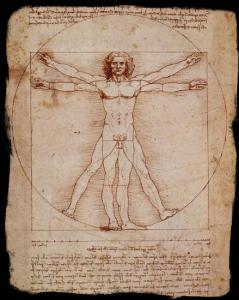

Big Data, artificial intelligence, and machine learning have rapidly encroached upon (inhabited, invaded, colonized, body-snatched, pick your verb) the biological and social foundations of individual human identity – the language, reason, judgment, and desire of the embodied, conscious self. Data collection and extraction has in short order hollowed out and inverted – metaphysically if not literally – the physical forms and landscapes through which we have always, as a species, mapped our reality. It now seems clear that within several more decades, digital data and artificial intelligence will reconstitute and reformat what it means to be human.

The forward-looking science of complexity has quickly grasped the historical significance of this Big Data revolution (see, for instance, the work of theoretical physicist Geoffrey West of the Santa Fe Institute on the “scaling singularity”). However, perspectives on the implications of this great transformation remain generally undeveloped within popular discourse. They remain especially elusive for Catholic, Creator-Centric Christians of the “Natural Law” persuasion, for whom ancient scriptures provide their epistemological fundament, the mote in their eye that will not yield. The goal of this article is to surface and illuminate the underlying dynamics of this great inversion and consider its implications for the backward-looking and increasingly irrelevant human nature pieties and truisms of the Abrahamic religions.

The Form-Content Paradox

In 1998, I was working as the editorial director at a small technology startup called Partes Corporation that offered free access to the SEC’s EDGAR corporate filings. One morning, the company’s president invited me to join him for a meeting with a Microsoft executive about a new markup language called XML, short for “eXtensible Markup Language.” Offspring of a clunky professional publishing language called SGML, XML was essentially a flat-file database, a nerdy way to organize, store, publish, query, and analyze digital content separately from the HTML formats used to display it.

I was familiar with HTML, sufficiently fluent that I had become a liability to my own company because of my impulse (ignoring all engineering and publishing protocols) to upload HTML content to our website whenever the spirit moved me. But in our meeting at Microsoft, in what was one of the early business conversations about XML, I could only with difficulty wrap my head around what it would mean to separate “content” – anything describing or identifying what we might call the substance or “thingness” of the world – from its HTML formats. In my mind, “form” and “content” were essentially the same thing.

Hylomorphism is the term of art for philosophical considerations of form-content relationships dating back to Aristotle and refined by medieval scholastics such as Thomas Aquinas. Hylomorphism is also a word prominently used by computer scientists to characterize recursive properties of Hadoop and other Big Data processing and analytics software platforms used for machine learning and artificial intelligence. In 1998, however, my frame of reference for hylomorphism was the daily newspaper.
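In functional programming, a hylomorphism is conventionally defined as an unfold, which grows a structure from a seed (an anamorphism), followed by a fold, which collapses that structure into a single result (a catamorphism). The Python sketch below specializes the idea to lists. The helper name `hylo` and the factorial example are illustrative assumptions. The code is not taken from Hadoop or any other platform named here.

```python
from typing import Callable, Optional, Tuple, TypeVar

S = TypeVar("S")  # seed type consumed by the unfold
E = TypeVar("E")  # element type produced by the unfold
R = TypeVar("R")  # result type produced by the fold


def hylo(
    unfold_step: Callable[[S], Optional[Tuple[E, S]]],
    fold_step: Callable[[E, R], R],
    base: R,
    seed: S,
) -> R:
    """List-shaped hylomorphism (hypothetical helper for illustration).

    unfold_step returns None to stop, or (element, next_seed) to continue.
    fold_step combines one element with the folded result of the rest.
    The intermediate list is never built: each element is folded in as
    soon as the recursion on the remaining seed returns.
    """
    step = unfold_step(seed)
    if step is None:
        return base
    element, next_seed = step
    return fold_step(element, hylo(unfold_step, fold_step, base, next_seed))


def factorial(n: int) -> int:
    # Unfold n into n, n-1, ..., 1, then fold those values by multiplication.
    return hylo(
        lambda k: None if k == 0 else (k, k - 1),
        lambda x, acc: x * acc,
        1,
        n,
    )


print(factorial(5))
```

The recursion depth equals the length of the unfolded sequence, so a production version in Python would more likely use a loop. The recursive form is kept here because it mirrors the definition.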

At that time, for me and for most people, the content of a daily newspaper derived its attributes and essence from the format in which we engaged it – the folded, ink-perfumed newsprint – and indeed required this format to exist in any meaningful sense as information. This content – the facts, the narrative – these were the ingredients baked into the newspaper “cake.” Once baked, I could not imagine retrieving these ingredients, from this cake, for use in any other baking project.

The first decade of the Web did not meaningfully alter my perspective on the integrated relationship in publishing between container and content. Prior to the development and implementation of XML as a markup language, HTML documents published to the Internet and viewed on web browsers were the only way to engage most online content. Despite superficial differences in presentation, with scrolling and hyperlinks, the experience of reading most web content did not differ enormously from the old-school, analog experience of reading a book or newspaper.

Of Palm Pilots and Snakes

By 2000, I had moved on to Nimble Technology, an XML startup with a ton of venture capital money. I can still recall our CEO, Suzan DelBene (now the congresswoman representing Washington state’s 1st congressional district), pitching to employees an imagined XML user scenario.

An explorer in the Amazon (the Brazilian Amazon, not the Seattle Amazon) sees a large snake sidewinding its way toward him and snaps a photo with his Palm Pilot. The photo flies across the Internet to Nimble servers. The servers instantly map the picture to the correct snake species and return to our intrepid explorer essential data about the snake, including the properties and effects of its venom and the best ways to treat its bite.

Nimble was ahead of its time, of course (perhaps too nimble). Fallout from Y2K, the dot-com bubble burst, 9/11, war in Iraq, and the 2008 global financial meltdown, along with specific overselling of XML itself (a technology not inherently sexy to begin with), shrank interest in data markup languages to the vanishing point. But the infrastructure surrounding XML continued to develop and spread.

Library of Babel

In 2002, I founded my own company, a small online legal news and data platform called Knowledge Mosaic. It aggregated and integrated information previously accessible only in print (mostly unstructured corporate regulatory and disclosure documents) and served it to customers. My company benefited from the options XML gave us to flexibly and imaginatively repurpose and reformat document data for our legal customers.

From a somewhat precarious position on the margins of the digital data business, I directly experienced in this first decade of the 21st century what otherwise escaped public notice: the enormous progress of technologies supporting digital storage, processing, memory, and networking bandwidth and connectivity. This progress allowed cloud computing to emerge as a default location for massive amounts of data that existed independently of any specific format.

Since 2000, what XML (and its successor languages) has quietly built upon this infrastructure is a basis for virtualizing the world. XML has given us a translation method, via tags, for describing the world that has almost no limitations. HTML had been simply another presentation format, a digital version of a printed page, a fixed experience. XML tagging allowed for the possibility of taxonomizing everything, flexibly, portably, instantly, and with endless recombinant reproducibility. It is perhaps not unlike the Library of Babel that Jorge Luis Borges imagined, only with emergent possibilities.
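Here is a minimal Python sketch that makes the separation of content from form concrete. It uses the standard library's `xml.etree.ElementTree`. The tag names (`species`, `commonName`, and so on) are hypothetical, invented for this example rather than drawn from any real schema. The point is that one tagged record can be rendered into two unrelated presentations without changing the record itself.

```python
import html
import xml.etree.ElementTree as ET

# A hypothetical tagged record. The tags say what each piece of content *is*
# (a name, a region), not how it should look on a page or screen.
SNAKE_XML = """
<species>
  <commonName>Bushmaster</commonName>
  <scientificName>Lachesis muta</scientificName>
  <venomous>true</venomous>
  <region>Amazon basin</region>
</species>
"""


def to_html(record: ET.Element) -> str:
    """Render the record as an HTML fragment: one possible 'form'."""
    name = html.escape(record.findtext("commonName", ""))
    sci = html.escape(record.findtext("scientificName", ""))
    region = html.escape(record.findtext("region", ""))
    return f"<h2>{name} (<i>{sci}</i>)</h2><p>Found in: {region}</p>"


def to_text(record: ET.Element) -> str:
    """Render the same record as a one-line plain-text summary: another 'form'."""
    label = "venomous" if record.findtext("venomous") == "true" else "non-venomous"
    return f"{record.findtext('commonName', '')}: {label}, {record.findtext('region', '')}"


record = ET.fromstring(SNAKE_XML.strip())
print(to_html(record))
print(to_text(record))
```

A third renderer (a map pin, a spreadsheet row) could be added in the same way, leaving the tagged record untouched.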

The Web API: Big Data’s Force Multiplier

But how would this descriptive taxonomizing actually occur? The digital age’s force multiplier has been the proliferation of the XML-powered “Web API” – the application programming interface built for distributed or decentralized interactions with centralized web platforms such as Google Maps, Wikipedia, Netflix, and Spotify. Web APIs gave these web platforms the capacity to connect, leverage, customize, and augment the foundational data and the services they support. Since 2005, the number of publicly available Web APIs has grown from 105 to more than 21,000. As was the case for XML itself, the technical foundations of the Web API were lightweight and rather trivial, even if the emerging cloud infrastructure it leveraged was not. But in short order, XML and the API changed how we engage and experience the world.

One of the coolest and most ubiquitous Web API implementations leveraging XML has been Google Maps. XML provided the underlying data foundations for Google Maps, and continues to this day to support its extensibility and customization features – a huge dimension of the digital data revolution one could not imagine in traditional analog publishing environments. Google Maps, like most other successful XML implementations, relies upon Web APIs to support collaborative or crowdsourced customization of the core application.

The Google Maps API platform has both spawned and leveraged amazing geospatial mapping innovations based on the sharing and exchange of location-specific data – built structures, geographic and geologic features, demographic attributes, moments in time.

Google Maps’ support for data layers has opened new opportunities to integrate different types of data by overlaying them on the same location grid. This makes it possible not simply to virtualize three-dimensional space, but to attach endless numbers of other data “dimensions” to it, producing a vertical landscape that in some instances approximates holography. The Google Maps data layers create an n-dimensional, data-rich mapping “landscape,” which includes location-tagged traffic and transit data, photos, weather information, and Wikipedia articles. More than three million websites have used the Google Maps API, making it the most ubiquitous Web API globally.
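The idea of stacking data layers on a shared location grid can be sketched in a few lines of Python. This is not the Google Maps API. It is a hypothetical, self-contained illustration with three steps:

- each layer is a list of `(lat, lng, value)` points;
- every point is snapped to a grid cell;
- all layers that touch the same cell are merged into one record.

The layer names, coordinates, and the 0.01-degree cell size are assumptions chosen for the example.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Cell = Tuple[int, int]
Point = Tuple[float, float, str]  # (latitude, longitude, value)


def cell_of(lat: float, lng: float, size_deg: float = 0.01) -> Cell:
    """Snap a coordinate to a square grid cell of `size_deg` degrees.

    0.01 degrees of latitude is roughly 1.1 km; cells narrow east-west
    away from the equator.
    """
    return (int(lat // size_deg), int(lng // size_deg))


def overlay(layers: Dict[str, List[Point]], size_deg: float = 0.01) -> Dict[Cell, Dict[str, List[str]]]:
    """Merge every layer onto one grid: cell -> {layer name -> values}."""
    grid = defaultdict(lambda: defaultdict(list))
    for layer_name, points in layers.items():
        for lat, lng, value in points:
            grid[cell_of(lat, lng, size_deg)][layer_name].append(value)
    # Convert to plain dicts so lookups of missing keys raise instead of
    # silently creating empty entries.
    return {cell: dict(by_layer) for cell, by_layer in grid.items()}


# Hypothetical layers around downtown Seattle.
layers = {
    "traffic": [(47.6062, -122.3321, "heavy")],
    "weather": [(47.6065, -122.3325, "rain")],
    "photos": [(47.6205, -122.3493, "skyline.jpg")],
}

grid = overlay(layers)
for cell, by_layer in sorted(grid.items()):
    print(cell, by_layer)
```

Each added layer simply becomes another key in the per-cell record, which is one way to picture the open-ended “dimensions” the paragraph describes.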

The Thin Slice: Data’s Digital Inversion

Weirdly, cloud computing leader Amazon has for years used trucks to transport hard drives with business data to its cloud computing data centers, the forward-looking use of an obviously inadequate – if not immediately anachronistic – delivery mechanism to secure the company’s first-mover advantage (not unlike Nimble Technology’s somewhat unlikely “Palm Pilot” scenario). But the algae-bloom explosion of global data after 2010 speaks to the relatively frictionless ease with which most businesses (and other entities public and private) constructed a “connected” digital landscape that has both inverted and subverted the material landscapes from which we humans have constructed our maps of reality.

Consider the rapidity and scale of this digital data inversion – its “hockey stick” profluence – and then ponder its implications for how we understand and live in the world. Data now floods our planet oceanically, with consequences – for data science, data storage, data networks, processing technologies, artificial intelligence, social media, smartphones, robotics, automation, the Internet of things, autonomous vehicles and weapons, and the surveillance state – that no one could have imagined two decades ago.

Nearly every profession and discipline has become data-dependent, if not data-overwhelmed, each now vying to become its own avatar for a “thin-slicing” paradigm (itself inverting Malcolm Gladwell’s conventional “blink” conception of thin-slicing) that sucks unfathomable quantities of layered information about our material world (pretty much anything that can be measured, with much of it also imaged) into its maw.

Let’s consider four instances of this new kind of thin-slicing in professional settings: 1) sports analytics; 2) medical imaging informatics; 3) full-spectrum astronomy; and 4) computational design.

1) Sports Analytics

Data has devoured sports. Michael Lewis published Moneyball in 2003. Since 2006, the MIT Sloan Sports Analytics Conference has grown from 100 to nearly 4,000 attendees. The Conference was also the venue for the unveiling of MLB Statcast, a platform meshing 3D Doppler radar and high-definition video to emulate human stereoscopic vision and capture every movement, distance, and angle on the playing field.

Statcast generates terabytes of raw signals (20,000 per second) for every major league baseball game. It introduces precise measurements for (at least) 30 new pitching, hitting, baserunning, and fielding indices, and it has turbocharged the invention of arcane yet precise metrics (e.g., wOBA and wRC+) to assess player and team performance. Statcast spawned an analytics ecosystem that almost instantly obliterated the timeless baseball box score we associate with our late-lamented newsprint format, along with traditional methods of scouting, coaching, managing, and paying players.
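For readers unfamiliar with wOBA, the metric is a weighted sum of on-base events divided by a plate-appearance denominator (AB + BB - IBB + SF + HBP). The Python sketch below follows that shape. The weights in `EXAMPLE_WEIGHTS` are illustrative placeholders of roughly the right magnitude, because the published linear weights are recalculated every season. The sample stat line is hypothetical.

```python
from typing import Dict

# Illustrative placeholder weights only: published wOBA linear weights are
# recalculated every season, so substitute the values for the season being
# analyzed.
EXAMPLE_WEIGHTS: Dict[str, float] = {
    "uBB": 0.69,  # unintentional walk
    "HBP": 0.72,  # hit by pitch
    "1B": 0.88,
    "2B": 1.25,
    "3B": 1.58,
    "HR": 2.03,
}


def woba(stats: Dict[str, int], weights: Dict[str, float]) -> float:
    """Weighted on-base average.

    Numerator: each on-base event weighted by its run value.
    Denominator: AB + BB - IBB + SF + HBP.
    """
    unintentional_bb = stats["BB"] - stats["IBB"]
    numerator = (
        weights["uBB"] * unintentional_bb
        + weights["HBP"] * stats["HBP"]
        + weights["1B"] * stats["1B"]
        + weights["2B"] * stats["2B"]
        + weights["3B"] * stats["3B"]
        + weights["HR"] * stats["HR"]
    )
    denominator = stats["AB"] + unintentional_bb + stats["SF"] + stats["HBP"]
    return numerator / denominator


# A hypothetical stat line, not any real player's.
sample = {"AB": 10, "BB": 2, "IBB": 1, "HBP": 1, "SF": 0,
          "1B": 2, "2B": 1, "3B": 0, "HR": 1}
print(round(woba(sample, EXAMPLE_WEIGHTS), 3))
```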

Houston Rockets General Manager Daryl Morey, an MIT graduate, was one of the founders of the Sloan Sports Analytics Conference. His data-driven vision has transformed basketball no less completely than Billy Beane’s predictive analytics genius changed baseball. Since 2009, the NBA has packaged video tracking, video indexing, and statistical modeling technologies to quantify precise ball trajectory, player location, and player movement data to the millisecond. As with baseball, this data has, within a span of only a few years, entirely changed how the game is played.

The incredible detail of NBA data maps also has created the opportunity (if not the imperative) to “thin-slice” basketball. The NBA Advanced Stats Glossary defines 280 metrics captured by its tracking platform. These include such unlikely data points as the number of times a player has a rebound chance but defers the rebound to a teammate (we’re looking at you, Steven Adams), or the percentage of an offensive player’s overall possessions while guarded by a specific defensive player.

2) Medical Imaging Informatics

The volume of health care data doubles nearly every 18 months, with rivers of technology-driven diagnostic imaging and genomic information accounting for a vast percentage of this growth (the radiology team at the Mayo Clinic in Jacksonville produced an average of 1,500 images daily in 1994, 16,000 images daily in 2002, and more than 80,000 images daily in 2006, with only God knows how many images burying its radiologists in 2019).

As one doctor noted, “MRI image creation is driving storage requirements significantly. The trend is more image with thinner slices and 3D capability. We’ve gone from 2,000 images to over 20,000 for an MRI of a human head, and stronger magnets and higher resolution pictures means more data stored.”

Meanwhile, the EU’s Human Brain Project deli-slices preserved human brains into 7,000 horizontal layers, with each slice then scanned to a depth resolution of 1 micrometer (or 500 times the resolution of the most powerful clinical MRI scans) and reassembled into a 3D atlas of the brain. The 7,000 brain slices amount to 2 petabytes of data (equivalent to all data stored in US academic research libraries). Storage for each brain slice requires the equivalent of a 256 GB hard drive.
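As a quick consistency check, assuming decimal units (1 PB = 1,000,000 GB), the per-slice and total storage figures agree:

$$
7{,}000\ \text{slices} \times 256\ \tfrac{\text{GB}}{\text{slice}} = 1{,}792{,}000\ \text{GB} \approx 1.8\ \text{PB} \approx 2\ \text{PB}
$$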

3) Full-Spectrum Astronomy

Likewise, the light sensitivity and spectrum breadth of telescopes have increased exponentially. The Hubble Space Telescope, designed in the 1970s, transmits about 20 GB of data each week. The Atacama Large Millimeter Array of 66 radio telescopes in Chile, completed in 2011, will eventually log 14 TB of data to its archives each week. That is a pipeline of signals approximately 700 times greater than Hubble's. The Large Synoptic Survey optical telescope under construction in Chile is expected to double the signal inflow of Atacama. The ambitious (and controversial) Square Kilometer Array radio telescope, if it is ever built, illustrates the outrageous potential of a full-spectrum, widely dispersed radio array. Its estimated inflow is 70 petabytes of compressed signal data each week, 2,500 times the amount of data currently harvested by the world’s most powerful telescopes.
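The ratios above can be checked directly under two assumptions: decimal units, and a 2,500-fold comparison measured against the Large Synoptic Survey Telescope's expected inflow. That inflow is taken as double Atacama's, or 28 TB per week.

$$
\frac{14\ \text{TB/week}}{20\ \text{GB/week}} = \frac{14{,}000\ \text{GB}}{20\ \text{GB}} = 700,
\qquad
\frac{70\ \text{PB/week}}{2 \times 14\ \text{TB/week}} = \frac{70{,}000\ \text{TB}}{28\ \text{TB}} = 2{,}500.
$$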

4) Computational Design

In a 2016 CityLab post sussing out complexities of the relationship between data and cities, the inestimable Richard Florida returns us to the n-dimensional descriptive granularity of geolocation data mashups. Geolocation research leverages data and Web APIs from platforms such as Google Maps, Yelp, Foursquare, Twitter, Flickr, Zillow, and Uber. These massive datasets now inform remarkably detailed studies of urban environments, including analyses of the relationship between race and gentrification; neighborhood conflict and ethnicity; the economic structure and characteristics of cities; and the demographics of consumption, health care and mobility (not to mention the remarkably inventive mappings of the late-lamented Floating Sheep cartography collective).

Not surprisingly, architecture and design firms have been even more proactive in advancing a data-driven “smart cities” vision. Google spinoff Sidewalk Labs, for example, assumes a future for cities with design feedback loops based on incredibly specific location-based and movement-based sensor grids embedded within the built environment.

International architecture firms such as Kohn Pedersen Fox have assembled computational design prototyping labs, modeled on software industry “rapid development” principles. This “generative design” methodology relies upon data science and machine learning to power a design space that can cycle through tens of thousands of design iterations based on thousands of different data inputs. One can see how the KPF lab, and computational design methods generally, depend on both a layered workflow and a data-intensive, thin-slicing design analysis routine.
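The core generative design loop is to cycle through many candidate designs and keep the best one. It can be sketched as a simple search over a parameterized design space. In the Python sketch below, the `Design` parameters, their ranges, and the `score` function are all hypothetical stand-ins, because this article does not describe KPF's actual inputs or objectives. Plain random search is used in place of the machine learning the paragraph mentions.

```python
import random
from dataclasses import dataclass


@dataclass
class Design:
    """A hypothetical, drastically simplified building massing."""
    floors: int
    footprint_m2: float
    window_ratio: float  # fraction of the facade that is glazed


def score(d: Design) -> float:
    """Hypothetical objective: reward usable floor area, penalize glazing
    far from 40% (a stand-in for energy performance) and floors above an
    assumed 40-floor height limit."""
    floor_area = d.floors * d.footprint_m2
    energy_penalty = abs(d.window_ratio - 0.4) * floor_area
    height_penalty = max(0, d.floors - 40) * 5_000.0
    return floor_area - energy_penalty - height_penalty


def generate(rng: random.Random) -> Design:
    """Sample one candidate design from the (assumed) parameter ranges."""
    return Design(
        floors=rng.randint(5, 60),
        footprint_m2=rng.uniform(800.0, 2_500.0),
        window_ratio=rng.uniform(0.2, 0.8),
    )


def search(iterations: int, seed: int = 0) -> Design:
    """Random search: generate `iterations` candidates, keep the best-scoring one."""
    if iterations < 1:
        raise ValueError("iterations must be at least 1")
    rng = random.Random(seed)  # seeded so runs are reproducible
    best = generate(rng)
    best_score = score(best)
    for _ in range(iterations - 1):
        candidate = generate(rng)
        candidate_score = score(candidate)
        if candidate_score > best_score:
            best, best_score = candidate, candidate_score
    return best


best = search(20_000)
print(best, round(score(best)))
```

Random search was chosen only because it is the simplest loop that evaluates many iterations against many inputs. A real system would replace both the sampler and the scoring function.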

Content Liberation and the Arc of History

XML, and its successor markup and tagging languages, have made possible new ways to liberate (describe, extract, separate) content (semantic and otherwise) from its analog and digital forms, allowing for limitless exchange, recombination, reuse, and reconceptualization of this content, a kind of virtual Big Bang.

Other human-readable and machine-readable languages for structuring and exchanging data have since largely displaced XML, especially in cloud-sourced Big Data applications. However, the early adoption and influence of XML helps us to confront new dimensions of the complex historical and philosophical relationship between content and form, between the substance of things and the shapes they take.

XML ushered in the virtual world that now both mimes and shapes our reality. Its impact also represents a paradigmatic instance of the process by which technology revolutions can bend the arc of history. The Big Data revolution is the output of a technology innovation equivalent to – and sharing elements of – other innovations implicated in pivotal moments of human history.

Examples include: the moldboard plow (~11th century, for three-field crop rotation); the movable-type printing press (15th century, for mechanical reproduction of printed materials); the square-rigged ocean sailing carrack or galleon (15th and 16th centuries, for trans-oceanic trade, exploration, and conquest); the external and internal combustion heat engine (18th and 19th centuries, for mining, manufacturing, shipping, and transportation); and the electro-magnetic particle accelerator (20th century, for cracking open the mysteries of the subatomic realm). With Archimedean violence, these historically pivotal innovations collided with the forms of the world and split them apart, liberating the “ingredients” or substances trapped within them. These innovations “unbaked the cake.”

We can profitably compare the psychological impacts of the data revolution of the early 21st century to the interior emotional and cognitive consequences of the printing revolution that originated in the mid-15th century. During this era of “mechanical reproduction” (about which Walter Benjamin famously wrote), book production increased from about 20 million volumes in the 15th century to more than 1 billion by the 18th century. Unfiltered access to information created new dramas of personal subjectivity associated with epistemic confusion and social anarchy. Sound familiar? Now compress that growth from 400 years to 20 years.

Or compare the disruptive economic and labor force consequences of the data revolution of the early 21st century to the cataclysmic consequences for labor of the steam-powered industrial revolution of the early 19th century. E.P. Thompson’s The Making of the English Working Class reminds us of the political layers to these transformations, with innumerable fascinating analogies. One is the Benthamite panopticon, mirroring the modern surveillance state. Another is the development of an “outworker” industry within the textiles trade that deskilled and immiserated workers, resembling the modern “gig economy.”

From Describing the World to Becoming the World

The impact of format-agnostic data technologies such as XML both resembles and differs from the outcomes of other historically seismic technology innovations. Each of these “Archimedean moments” created entirely new tool kits and entirely transformed perspectives on ourselves (as subjects) and on the world (experienced as the set of objects with which we interact). In this sense, the revolutions in which these innovations participated were not only material, but cognitive and phenomenological.

However, the liberation of digital data differs in other important respects from prior innovations. The emerging landscape of machine learning and artificial intelligence applications requires inputs of non-material, invisible, unfathomably large quantities of electronic information harvested from business, social, and sensor data universes. The data explosion is truly turning both our emotional lives and physical bodies inside-out, with surveillance, tracking, and asymmetrical information landscapes fundamentally reshaping our identities and capacities.

Data-driven ride-sharing services such as Uber and Lyft preview what this world may look like, with new realities determined by information asymmetries and one-way information interactions that allow these companies, via feedback loops, to “game” and condition driver behavior. The enticing language of choice, freedom, and autonomy used by ride-share companies to recruit drivers masks the extent to which these companies algorithmically program and constrain driver choices.

Early in this article, I alluded to the famous story by Jorge Luis Borges entitled The Library of Babel, about an imagined library with volumes that encompass the full extent of human knowledge, literally every book that ever has been or ever might be written. Today, one can visualize a world in which Google Maps (and other “virtualization” technologies) is virtually coextensive with the entire world, with data representing every material object and every interaction across every landscape at every scale. At that point, does Google Maps become the world? Might it even replace the world?

The Divinity of Data

The dream of an “artificial intelligence” rivaling or surpassing the intelligence of humans has long haunted our imaginations. Artificial intelligence research itself has experienced several “AI winters” since the 1970s. In these periods, inflated expectations for the technology collided with the real barriers to achieving the goals of a programmable, disembodied intelligence. Indeed, through the first decade of the 21st century, AI research and development efforts labored to surmount market skepticism about whether AI could ever clear the philosophical, logical, technical, and applied hurdles to implementing a useful machine intelligence.

In the past decade, a confluence of conceptual and technical breakthroughs in the capacity of machines to “teach themselves” resulted from the use of advanced statistical methods and machine logic (Deep Learning) to analyze unfathomably large and generally unstructured, distributed data repositories (Data Lakes). Renewed attention to artificial intelligence, bordering on frenzy, has plumed from machine learning successes in previously daunting domains such as computer vision, speech recognition, natural language processing, drug discovery, and bioinformatics.

By one definition, Big Data is a field of inquiry and exploration that addresses super-sized datasets (measured by the dimensions of volume, variety, velocity, veracity, and value) that human-scale methods and tools can neither fathom nor manage. The unfathomability of Big Data seems to matter most, particularly as the province of the human species – as conscious, individual selves and as decision-making, world-creating communities – shrinks beneath the looming shadow of artificial intelligence.

We locate mystery, fear, confusion – other words for God – in the unfathomable. The minuscule scale of subatomic particles and the eternally vast spaces of the universe are beyond our capacity to process. Similarly, data volumes – terabytes, petabytes, exabytes, zettabytes and beyond – have scaled beyond our capacity to process as individuals.

Data has become our new God, our new unmoved mover, and in this sense, it is fully appropriate and meaningful that hylomorphism, which for Aristotle and Aquinas was ultimately about the study of the soul, should in our century become the domain of computer science. Like God, the data is now everywhere. It is behind everything. And with application of artificial intelligence to virtually every dimension of our lives, data may soon “become” everywhere and everything.

The Death of God and the End of Individuality

Going forward, the specific “pivot” we are experiencing will challenge and change our understanding of what it means to be human, to live inside and outside of our bodies, to possess consciousness – the constituents of which include individuality, agency, autonomy, judgment, memory, and desire.

All meaningful technologically driven historical pivots pose these challenges to our self-definition as a species. The arc of time through which they occur creates the political space in which to negotiate the outcomes of these transformations. The current data-driven historical pivot, launched with XML and playing out in the reconstruction of our reality in relation to digital data maps, has occurred in a compressed time arc. That compression clearly raises the stakes for the political effort to shape its outcome.

The generational piece of this story most interests me. What percentage of one’s life occurs before and after the temporal “digital divide”? How much does it matter whether one’s personal foundation – the embodied self – was hatched before or after the advent of the web browser and the smartphone? Will any concept of embodied selfhood, as we currently and historically understand it, even exist? What will the personalities and emotional states of children born into this emergent digital grid look like? Finally, is it possible for children born into the digital age ever to take seriously the concept of the personal God revealed in Abrahamic scripture, upon which the foundations of Western religion and “Western civilization” depend?

These are the questions that challenge us as a species in the brave new world of oceanic data incepted by the humble markup language called XML. These are questions Catholics, Christians, and all exponents of Abrahamic religion (who understand the world and their own species through the lens of ancient scriptural revelation) can barely comprehend and for which they have no answers.