Michelle Obama famously called us to be our best selves as citizens: “When someone is cruel or acts like a bully, you don’t stoop to their level. No, our motto is, when they go low, we go high.” But that standard is difficult to maintain in our increasingly Orwellian world of “Alternative Facts.” As guides for such a time as this, Oxford University Press has published an excellent and accessible book related to this topic each of the last two years:

- Denying to the Grave: Why We Ignore the Facts That Will Save Us by Sara E. Gorman and Jack M. Gorman in 2016 and

- The Death of Expertise: The Campaign Against Established Knowledge and Why it Matters by Thomas M. Nichols in 2017.

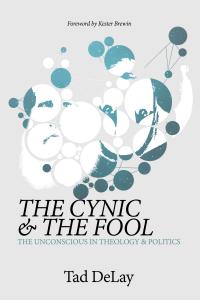

However, as I have reflected on this topic, a third book kept coming to mind: The Cynic & the Fool by Tad DeLay, a scholar who writes at the intersection of psychoanalysis, philosophy, and theology. DeLay’s work is an important reminder that if we can’t agree on the facts, how we proceed will sometimes depend on what is going on underneath our disagreement. To use DeLay’s categories, are the facts in dispute because we are engaged with a “misinformed but honest fool”? Or are we dealing with a nihilistic cynic, who does not care about the truth—only about saying or doing what it takes to spin-doctor perception and amass power at any cost (DeLay 3)?

I suspect we have all watched enough political interviews to have wondered to ourselves periodically whether a given politician “is so foolish as to actually believe what he just said or, instead, is just [cynically] towing a party line he knows is false” (DeLay 3). Along these lines, the late conservative political commentator William F. Buckley (1925-2008) was famous for saying, “I will not insult your intelligence by treating you as if you are as stupid as you pretend to be” (83).

I wish we lived in a world in which everyone was always acting above board. Unfortunately, we have to pause sometimes and consider whether the person disagreeing with us about the facts is acting in good faith (“with honesty and sincerity of intention”) or bad faith (“with an intent to deceive”). And we know there have been a lot of bad faith actors over time, because we have lots of words to describe this phenomenon: con-man, demagogue, snake-oil salesman, huckster, charlatan, cheat, fraud, sham, swindler. I could go on (DeLay 4).

So when I find myself encountering Orwellian doublespeak about “alternative facts,” I remind myself periodically of the Philip K. Dick line that, “Reality is what doesn’t go away even when you stop believing in it.” There is no such thing as an “alternative fact.” A fact is something that is “indisputably the case.” And there may be consequences for one or more parties, as “reality” catches up with the propaganda in either the short or the long term. But in the meantime, arguing with someone operating in bad faith can be exhausting at best and deeply harmful at worst.

I am also reminded of the line that I find most haunting from George Orwell’s dystopian novel 1984: “The heresy of heresies was common sense…. The Party told you to reject the evidence of your eyes and ears. It was their final, most essential command.” Anytime the powers that be begin to convince significant numbers of people to disbelieve facts, we are in treacherous times. And whenever people in power are promoting “alternative facts,” concerned citizens should leverage political, ethical, technological and other forms of power to replace them with leaders more likely to act in good faith and in accordance with more-reputable information. Of course, history also shows us that promises made on the campaign trail do not always get fulfilled in office.

But I don’t want to dwell only on the depressing number of bad-faith actors in our world. What about the other side of DeLay’s formulation: the many who are not cynical nihilists, but merely “misinformed but honest fools”? David Dunning and Justin Kruger are two research psychologists at Cornell known for researching this question of the relationship between knowledge and confidence. Their most famous finding is called the “Dunning-Kruger Effect,” which shows that the less you actually know, the more confident you are about what you think you know. To quote Dunning and Kruger, under-informed people “not only reach erroneous conclusions and make unfortunate choices, but their incompetence robs them of the ability to realize it” (Nichols 44). Of course, experts get it wrong sometimes too, but if they are good-faith actors, they have also spent a lot of time studying common errors and pitfalls in their field. And here’s a related corollary to the “Dunning-Kruger Effect” called the “above average effect”: in almost every area, “everyone thinks they’re…well, above average.” Unfortunately, 50% of us are wrong, and which 50% shifts with the category under consideration.

The truth is that most of us don’t like to be wrong and love to be right. And there is good reason why. Our brains “get a dopamine rush when we find confirming data, similar to the one we get if we eat chocolate, have sex, or fall in love” (135). So evolution has given us a strong incentive to maintain our current view—because it feels more pleasurable to do so—irrespective of whether it is right or wrong. Psychologists call a related effect confirmation bias, “the tendency to search for, interpret, favor, and recall information in a way that confirms one’s pre-existing beliefs or hypotheses.”

In addition to the strong incentives that evolution has given us to maintain our current beliefs, brain scans have shown that our amygdala (our “fight or flight reaction”) is triggered when we encounter points with which we currently disagree. “When we hear a disagreeable idea, the body’s chemical reaction is the same as if someone had pulled a knife on us in a dark alley.” And when the amygdala is activated, brain scans also show a darkening of activity in the rational, prefrontal cortex portion of our brain (DeLay 53). These factors contribute to the all-too-human tendency to persist in believing delusions instead of painfully facing the facts (61).

So, having named some of what we are up against, if you want to increase your odds of changing someone’s mind, here are a few strategies. First, make sure everyone involved is relaxed and well-rested. If one or more of the people involved are hungry, angry, stressed, or tired, there is a low likelihood of anyone’s mind being changed.

Second, ask the other person if they would be willing to try the following along with you: “(G)o to a quiet place when you are relatively free from stress or distraction and write down what you know about the arguments on the other side of your belief. Also, write down what it would take for you to change your mind.” This practice can potentially expose one of two things for each of you: (1) that potentially there is nothing that could change one or both of your minds, in which case it may be better to stop talking about the subject at hand, if that is possible—these are what the courts call “irreconcilable differences”, or (2) you may identify the data that would be most likely to convince either or both of you (Gorman 140-141).

A more advanced technique is called “motivational interviewing.” There are lots of fascinating examples we could explore in which people disagree on the facts. We could consider the debates over the Raw Milk Movement (Nichols 22-23), Climate Change, Genetically-Modified Foods (Nichols 230), or Preventing Gun Violence (Gorman 4-5) to name only a few examples. But I would like to invite us to spend just a few minutes reflecting on the debate in our society over vaccines. (I should perhaps sub-title this section: ways to potentially make people incredibly angry at me.)

And I will admit that what it would take for me to change my mind about this debate would be for the scientific consensus to shift, which seems incredibly unlikely. The facts, as I understand them, are that:

The vaccine question was up for debate in 1998 when Andrew Wakefield first published his paper. But 17 years and numerous robust studies later, finding absolutely no [statistically-valid association between autism and vaccines], this is no longer a legitimate debate among respectable and well-informed medical experts and scientists. (Gorman 257)

Moreover, “It was uncovered in 2010 that Wakefield had been accepting money from a defense lawyer to publish certain findings, his paper was retracted, and Wakefield eventually lost his medical license. In addition to obvious conflicts of interest, the paper was scientifically unsound, with only 12 non-randomly selected subjects and no comparison group” (79).

All that being said, here’s an example of what it might look like to experiment with “Motivational Interviewing” techniques around this debate with someone who disagrees with you about the facts:

If a parent tells you that they think vaccines are dangerous and they are thinking of not getting their children vaccinated, the best first thing to say to this statement is not, “That is not true—there is no evidence that vaccines are dangerous,” but rather to gently prod the person into exploring their feelings further by saying something to the effect of “Tell me more” or “How did you come to feel nervous about vaccines?” You can then guide the person through a slowed-down, articulated version of their thought process in getting to the conclusion “Vaccines are dangerous,” and along the way get them to express their main desire, to keep their children healthy….

And that is the turning point of motivational interviewing, finding the deep motivation (i.e., “keeping my children healthy”) beneath the surface fear about vaccines.

After prioritizing deep listening over initially disputing facts, you can eventually ask questions that highlight angles this person may not have considered. So you might ask, “Do you know how many children who are vaccinated are diagnosed with autism within the year?” and “I wonder how many children who are not vaccinated get autism?” (170). For a fair assessment, the full range of statistical possibilities must be compared.

I also want to make sure I directly address the struggle to maintain our deepest values—such as the UU First Principle of “the inherent worth and dignity of every person”—even when we can’t reach agreement on the facts. One of the most helpful books I have found recently on this topic is Cultivating Empathy: The Worth and Dignity of Every Person — Without Exception by my colleague The Rev. Nathan Walker. Nate has written:

I once believed that it was powerful to condemn wrongdoers. I believed it right to tear down another’s unexamined assumptions and to vaporize those whose presence was not worthy of my attention. I believed that others were the cause of my aggression, others were to blame for my feelings of despair, disappointment, and righteous indignation…. I was doing justice…all while being a [jerk]…. (7-8).

For Nate, one of the most powerful tools for cultivating empathy is what he calls the moral imagination, “the ability to anticipate or project oneself into the middle of a moral dilemma or conflict and understand all the points of view.” Nate writes that,

It is possible for me to understand another person’s views…without necessarily agreeing with them or silencing my own voice. Understanding is a prerequisite for empathy…. When we observe oppression, let us develop strategies that free not only the oppressed but also the oppressor…. Do not let their unjust actions inspire us to cruelty, or else we will soon become what we set out against….

The Rev. Dr. Carl Gregg is a certified spiritual director, a D.Min. graduate of San Francisco Theological Seminary, and the minister of the Unitarian Universalist Congregation of Frederick, Maryland. Follow him on Facebook (facebook.com/carlgregg) and Twitter (@carlgregg).

Learn more about Unitarian Universalism: http://www.uua.org/beliefs/principles