“It’s never going to go to zero.”

So says Mike Schroepfer, Facebook’s chief technology officer, in a New York Times interview. “It” is the ongoing stream of hate, malicious messaging, and bad behavior that Facebook’s platform promulgates.

Schroepfer is simply being realistic. But his realism flies in the face of his boss’s belief that the solution to Facebook is more Facebook. Schroepfer’s admission is a coy, but direct, rebuttal of his boss’s idealistic insistence that Facebook can police itself and correct its own problems by deploying more and better AI. Zuckerberg has given this facile answer to every recent scandal. Schroepfer knows better, and says the problems with Facebook bring him to tears.

This public admission of empathy is sincere, I believe, and perhaps it is meant to help offset the general lack of empathy in Zuckerberg’s actions, but empathy needs to reform thinking at Facebook if it is to do any good. This is not to deny the importance of better AI. Of course that needs to play a role in mitigating abuses of the platform. It just won’t make the problems “go to zero.”

Zuckerberg’s idealistic vision for Facebook being all-things-to-all-people is a two-edged sword. It motivates a market-domination strategy, but it lacks realism and thus fails to recognize the depth of the problems.

Still, there is something admirable in Zuckerberg’s idealism. He represents merely the latest, greatest, and most extreme endorsement of the techno-utopianism that inspires tech-biz dreamers in Silicon Valley. Those dreams and dreamers bear witness to the beauty and creativity of the human spirit.

Nicholas Carr refers aptly to the “techno-utopianism” of Silicon Valley. Carr traces the narrative arc which has led to the dominance of software as the vehicle to carry this techno-utopianism forward:

By the turn of the century, Silicon Valley was selling more than gadgets and software. It was selling an ideology. The creed was set in the tradition of American techno-utopianism, but with a digital twist. The Valleyites were fierce materialists—what couldn’t be measured had no meaning—yet they loathed materiality. In their view, the problems of the world, from inefficiency and inequality to morbidity and mortality, emanated from the world’s physicality, from its embodiment in torpid, inflexible, decaying stuff. The panacea was virtuality—the reinvention and redemption of society in computer code. They would build us a new Eden not from atoms but from bits. (Carr 2016, p. xx)

The Valley’s ethos has grown deep roots: Idealistic thinking. Entrepreneurial optimism. The unbounded potential of code. From these roots, business plans grow utopian visions of the future, and entrepreneurs set themselves on the path of pursuing idealistic aims, reshaping the world as necessary along the way in order to remove any obstacles to their growth. No wonder Marc Andreessen says, “Software is eating the world.” From the perspective of a software engineer cum venture capitalist, it looks that way.

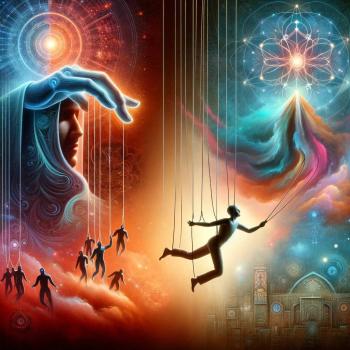

However essential technology is to human existence, and however wonderful the entrepreneurial vigor that births it, tragedy lurks in the cracks that betray utopian visions. Tragedy is indeed the right word to describe the dire, even disastrous outcomes that can flow from the good intentions of well-meaning people who put their trust in the wrong places.

Mr. Zuckerberg’s idealistic vision for the company, his trust in code, and his presumptuous desire to assimilate ever larger swaths of human consciousness without limit, spell tragedy for business ethics.

To understand this tragedy and find constructive paths through it, we need to develop a theological understanding of why cognizance of sin is a necessary hygienic ingredient in any prescription to cure idealistic thinking in (business) ethics.

In future posts, I shall unpack this thesis, express reasons for hope, and suggest some constructive direction forward.