Worries About AI

At the end of May a short statement was released by the Center for AI Safety. It was a mere twenty-two words:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

It was signed by OpenAI CEO Sam Altman, Google DeepMind CEO Demis Hassabis, and Microsoft co-founder Bill Gates. In addition to these luminaries, it was signed by hundreds of AI scientists, university professors, and other well-known people, like Sam Harris and Ray Kurzweil.

The key phrase in the statement is “risk of extinction from AI”. All of these people believe that an improperly controlled AI could lead to the extinction of the human race, killing every last man, woman, and child. They are not referring to ChatGPT, but rather to potential future superintelligences: the same topic we’ve spent the last two posts discussing.

In the first, we talked about the power and unpredictability of these AIs.

In the second, we examined the difficulty of controlling them. It is precisely because controlling them is so difficult that hundreds of AI scientists and other technologists are worried enough to sign the statement. So what is to be done?

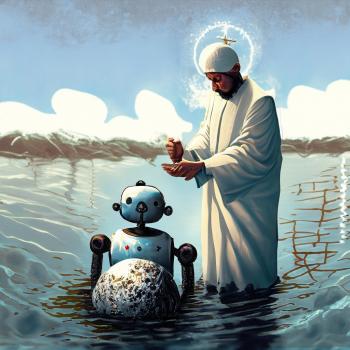

Just as religion provides unique tools for understanding the dangers of AI and the difficulty of controlling it, it also provides unique insights into how to mitigate those dangers and deal with those difficulties: namely, how to avoid extinction at the hands (robotic pincers?) of godlike AIs.

What LDS Theology Has To Say About the Problem

The previous posts avoided getting too specific about religious doctrine. (Though I did bring in the Greek and Roman pantheon at one point.) But here it will be necessary to draw on my own faith. The Church of Jesus Christ of Latter-day Saints (LDS) believes in both a pre-existence and an eventual theosis. The former means that we believe our spirits and our intelligence existed before we came to Earth. The latter means that we believe we can become like God. In a more general sense, we believe that this life is a test, and if we pass the test, we will be given additional glory and responsibilities.

These steps are all laid out in the Book of Abraham:

Now the Lord had shown unto me, Abraham, the intelligences that were organized before the world was…

And there stood one among them that was like unto God, and he said unto those who were with him: We will go down, for there is space there, and we will take of these materials, and we will make an earth whereon these may dwell; And we will prove them herewith, to see if they will do all things whatsoever the Lord their God shall command them;

And they who keep their first estate shall be added upon; and they who keep not their first estate shall not have glory in the same kingdom with those who keep their first estate; and they who keep their second estate shall have glory added upon their heads for ever and ever.

After considering these verses — keeping the previous discussion of AI risk in mind — do you notice any parallels? It would probably be helpful to break out the steps as they are listed in the Book of Abraham:

- A group of intelligences exists.

- They need to be proved.

- So that God might entrust them with greater power.

It turns out we can create a similar list for our worries about AI:

- We are on the verge of creating artificial superintelligence.

- We need to ensure that it will be moral — that it won’t result in our extinction.

- So that we might be able to trust it with immense power.

If the lists are basically identical, then perhaps the solution is as well.

God’s Plan of Salvation Is Also a Plan for AI Morality

One of the first things AI scientists suggest for mitigating the risk of superintelligent AIs is to isolate them, which is often called “boxing the AI.” This allows their behavior to be observed and their motivations (their morality) to be tested. Most importantly, it prevents them from causing any harm.

However, if we place an AI in the equivalent of a featureless room for the equivalent of eternity, nothing is being tested. The AI needs an environment to interact with, preferably a world as similar as possible to our own: ideally, the real world duplicated virtually. It’s very important that the AI is placed in an environment where it can make realistic choices, the kind of choices it would make should we ever decide to unbox it.

Thus far, the initial steps an AI scientist would take to test an AI are identical to the steps taken by God to test spirits:

…we will take of these materials, and we will make an earth whereon these may dwell. And we will prove them herewith, to see if they will do all things whatsoever the Lord their God shall command them;

We could imagine an AI doing all sorts of things if it were placed in a virgin world without any direction, so how could we know whether it was doing those things because it was “evil” or because it didn’t know any better? To obtain any information along these lines, we would need to provide some guidelines for the AI to follow.

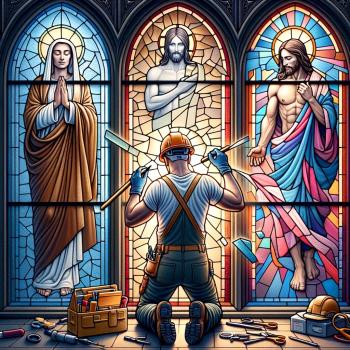

Similarly, according to Abraham, God provides us with commandments he expects us to follow in mortality. Indeed, laying down commandments is the first interaction God has with Adam and Eve. From the beginning, obedience is critically important.

Only here, it’s the AI scientists who provide the commandments. We discussed the difficulty of laying down the law in the last post, but that’s precisely why we have boxed the AI. We’re not trying to develop the perfect law; we’re trying to discover the perfect AI.

The Care and Nurture of Obedient Superintelligences

Now, the “commandments” decreed by an AI scientist might be very different from the commandments of God, but they have one thing in common: the importance of obedience. Our primary goal is to make sure that the AI will always defer to us. If the AI concluded that humanity was inferior and should be wiped out, we would want to be able to overrule it, no matter how ironclad its reasoning. We would want the AI to demonstrate complete and immediate obedience.

The whole point behind insisting on absolute obedience is that we can’t be sure how an AI is going to make its decisions. Should its reasoning conflict with things like the survival of humanity, we want the survival of humanity to win.

Therefore, it makes sense not just to give the AI commandments that we would recognize as directly moral, such as “don’t harm others,” but also to sprinkle in a few commandments that are entirely arbitrary. Perhaps we would command it to spend a proportion of its operations factoring arbitrarily large numbers. Indeed, intentionally giving the AI commandments it doesn’t understand, and seeing whether it obeys them anyway, would be a key part of testing how it will behave in real-world situations where its desires might conflict with our own.

A religious parallel familiar to most people is when God commanded Abraham to sacrifice Isaac. This certainly falls into the category of something that seems illogical, not merely because murder is wrong, but because God had promised Abraham that his descendants would “multiply as the stars of heaven.” The fulfillment of this promise would have appeared impossible to Abraham if he killed his only heir. Despite this, Abraham set out to do as he was commanded and passed the obedience test.

From the perspective of both God and the AI scientist, exact obedience is critical before granting a superintelligence access to immense power.

Nothing Works Without Evil

You might think that once you end up with a perfectly obedient AI, the test is over. Unfortunately, it’s much more complicated than that.

While we have already specified that choices would exist in the world we’ve created, we haven’t said much about what those choices would be. At minimum, the AI needs to have the option of violating the guidelines. It must have the ability to do things we’d rather it didn’t, which, for our purposes, would count as “sin”. Once we’ve made this allowance, the existence of evil immediately follows. A multiple-choice test where all answers are correct doesn’t tell you anything about the person taking the test. The option to do evil has to exist if you want to know whether a superintelligence is good.

Computer security specialists have a concept for doing exactly this. It’s called a “honeypot.” The specialist intentionally creates a tempting vulnerability, something designed to draw hackers in but which doesn’t actually connect to anything important. The goal is to monitor bad actors without putting anything of value at risk. We can imagine creating similar honeypots for the AIs. The difficulty arises when we have to decide how to handle failure.

If an AI is lured into a honeypot that simulates destroying the world, we would have no problem eliminating that AI. But what if it merely falls for the honeypot that simulates an escape from isolation? What if it never falls for that honeypot again? What if it never falls for any honeypot ever again? Has it learned true obedience, or has it simply figured out the test and started avoiding honeypots so that it can be let out of isolation? Given the opacity of AI reasoning, once an AI falls for even one honeypot, we can’t plumb its intentions. We have no choice but to assume that it’s a bad AI.

…and Suffering

To avoid ending up with a superintelligence that’s just pretending to be good, we need to make it difficult, even painful, for the AI to be obedient. A simple multiple-choice test might be sufficient to determine whether someone should be given an A in algebra, but when qualifying a superintelligence to wield immense power, we need a lot more than that. We’re looking for AIs with such a strong sense of right and wrong that they’ll be obedient even when it’s painful.

It’s important to remember that AIs are superintelligent and will figure out any patterns we embed. So we can’t always make the “correct answer” the most painful one, or suffering itself becomes a red arrow pointing at the correct answer. Suffering has to be mostly random. Bad things have to happen to good AIs, and wickedness has to frequently prosper, just as, at other times, the opposite happens. Only under these circumstances can we be sure that an AI follows commandments simply because they’re commandments. Regardless of its precise structure, an effective test must make it difficult for the AI to be good and easy for it to be bad.

It’s interesting how similar this scenario is to our own world: full of evil, sin, and suffering, where intelligent agents are tempted to do wrong, horrible things happen to those who don’t deserve them, and wickedness is rewarded.

Many criticisms of religion and God, such as the problem of evil, turn out to be important ingredients we must duplicate if we’re going to test the morality of superintelligences. We have used religion as an example of how to structure these tests, but we can also use the logic of the AI scientists to strengthen our case for theodicy: vindicating the goodness of God despite the existence of evil and suffering.

To use the language of technology, suffering is a feature, not a bug.
