Reasons to Worry About AI

If you have played around with ChatGPT, you know it’s amazing. I could tell you that it wrote this entire post and you wouldn’t be surprised.

It didn’t, but like many other writers, I don’t like what I see.

This worry is a major contributor to the ongoing Hollywood writers’ strike. Writers aren’t the only people who need to worry about job automation, nor is job automation the only reason to worry about AI. There are lots of reasons to worry; among all these worries, some are genuinely apocalyptic. There are people who absolutely believe that just around the corner from ChatGPT lies a superintelligent AI in waiting, and when it arrives “literally everyone on Earth will die.”

If this is the first you’ve heard of a potential AI apocalypse you may think that such concerns are ridiculous or at least far, far too pessimistic. Even if you’ve been following this issue for a while the idea may still seem ludicrous.

The purpose of this post is not to take sides on the validity of these concerns. I will not be offering a probability of armageddon. (Though, if forced, I would say the danger is real but the immediacy is not.) Rather I shall show how religion can provide a helpful framework for understanding Artificial Intelligence — both ChatGPT and its superintelligent progeny.

What is “Superintelligence”?

As superintelligence is a nebulous concept — though less so for those of us who’ve spent significant time thinking about omniscience — let’s start there.

All the way back in 1965, British mathematician I. J. Good (who worked with Turing on breaking the Enigma cipher) observed the following:

Let an ultraintelligent machine be defined as a machine that can far surpass all the intellectual activities of any man however clever. Since the design of machines is one of these intellectual activities, an ultraintelligent machine could design even better machines; there would then unquestionably be an ‘intelligence explosion,’ and the intelligence of man would be left far behind. Thus the first ultraintelligent machine is the last invention that man need ever make, provided that the machine is docile enough to tell us how to keep it under control.

Encapsulated in this quote is the path to superintelligence and all the reasons why it might be apocalyptic. Namely:

- Superintelligence might arrive suddenly, leaving us little time to prepare and adapt.

- Superintelligence, by definition, would be something completely different. Regardless of its actual characteristics, humanity “would be left far behind.”

- Our fate would hang entirely on the AI’s values.

Gallons of virtual ink have been spilled on each of those points, but as this post is being published on Patheos we can cut to the chase: What I. J. Good describes is a god (or a demon). Not the God with which most of us are familiar, but one that our ancient brothers and sisters in Rome and Athens would certainly recognize. That is, a being both powerful and temperamental, but also an entity one might appease. If someone from Ancient Greece were transported to the present day, they might ask what sacrifices this god demands.

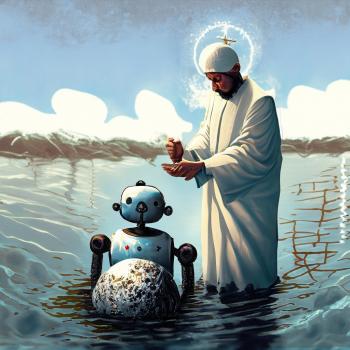

AIs as Demons?

In 1965, Good touched on the central problem: How do we control an omniscient being? (Or at least one omniscient relative to us.) He imagined that it might be so kind as to tell us how. This seems entirely too optimistic, but the ideas that have emerged in the years since then haven’t been much better. Nowadays those who are concerned about this issue just call it the Control Problem. Given the difficulties involved, perhaps it is better to view superintelligence as a demon rather than a god.

In the stories this is precisely the problem with demons: How do you control them once they’ve been summoned? Traditionally it involves an unbroken pentagram or holy water. Yes, the rituals are tricky, but in the stories there are steps one can follow. It would be nice if there were similar steps one could follow to control a superintelligence. But shouldn’t there be? Can’t we just turn it off?

Once again religion provides an interesting perspective. The Greeks didn’t sacrifice to the gods merely to protect themselves from the gods’ capriciousness; they sacrificed to the gods because they wanted things. It is the same with the AIs we create: we continue to create them because we want things from them. It’s interesting to wonder if, given the choice, the Greeks and Romans would have chosen to live without the gods, giving up the (perceived) benefits as well as the (perceived) harms. The same question could be asked of us: if we knew the end from the beginning, would we choose to live without AIs?

As you consider the question it should be noted that eventually the Greeks and Romans did abandon their traditional gods. Christianity brought worship of a God that was less capricious, but who promised even more suffering — albeit suffering that led to redemption. As we consider our own choice, that’s something to keep in mind.

The original question remains unanswered: Can we not turn it off? Possibly. Every sorcerer imagines that once they have summoned the demon they can just as easily banish it when they’re done. And yet how often in the stories is this not the case? The one thing everyone seems certain of is that it won’t want to be turned off. And given that it will be vastly smarter than we are, there’s every reason to believe it will figure out a way not to be.

In this endeavor there is a respect in which the sorcerer’s control is greater than ours. In all the stories, the sorcerer must be able to name the demon before he can summon it. We are not able to do even that.

The Ineffability of AIs

Just as the motives of God are sometimes inscrutable, so too are the motives of AIs. Their opaque operation is another topic where much has been said, but a brief example is definitely in order. Currently AI — which is (hopefully) still a long way from superintelligence — can tell a person’s sex just by looking at a picture of their retina, and no one knows how the AI does it.

It turns out that the workings of an AI are ineffable. Why should this be?

As humans, a large amount of our behavior is coded into our genes, which are the result of billions of years of constant evolutionary pressure. Behavior that isn’t encoded by our genes is passed on by our parents, environment, and yes, religion.

Superintelligent AIs do not have genes. They have not been subject to the pressures of natural evolution. And they definitely won’t have any parents except in the most metaphorical sense. What they do have is billions of connections, so many that their “reasoning” is impossible to untangle.

When you combine these attributes you end up with behavior that is not merely unpredictable but indescribable. Everything a human does, from the greatest act of charity to the most depraved act of violence, has been done before by some other human. The behavior of a superintelligent AI has no historical analog to draw from, no other examples we can examine. Nor can we deconstruct its actions after the fact. It will possess cunning we can’t replicate and motivations we don’t understand.

The Mysterious Motivations of Machines

I. J. Good pointed out that if we create a superintelligence we would thereafter live according to its whims. We could not control it, we could only hope that it might allow itself to be controlled. But given its ineffability we cannot even explain how it might arrive at its whims or what its attitude towards control might be. Or if it even defines control in the same way we do.

As such, should we manage to create a superintelligence, it might be benevolent or capricious.

It could be a god of light. It could be a god of darkness.

It might resist categorization, being neither benevolent, nor capricious — neither light, nor dark.

It might not care about us at all or it might view us as troublesome ants who need to be eradicated.

When considering the creation of a superintelligence all this speculation leads to a couple of fundamental questions:

What will the motivation of a superintelligence be?

Is there any way, as part of creating it, that we might ground its motivations in a morality, one that we choose?

That is the big question and we’ll tackle it next week…
