Coming to Georgia College and State University for the semester provided the opportunity to meet librarian Jonathan Harwell in person. While we were chatting about ChatGPT and education, he clued me in to a line of questioning that consistently exposes the limitations of this chatbot software: ask it about the worst obscure song by a particular musical artist. Since there is a band whose music I know well, and whose output includes an obvious no-contest winner for their worst song ever, I asked ChatGPT about the band Genesis. To be specific, I asked, “What is the worst obscure song by the band Genesis?” Its answer was as follows:

It’s difficult for me to say what the “worst” song by Genesis is, as opinions on music are subjective and can vary greatly from person to person. However, some songs by Genesis that may not be as well-known or popular as others include:

“Where the Sour Turns to Sweet”

“The Serpent”

“Pacidy”

“Let Us Now Make Love”

“The Cinema Show”

These are just a few examples, and whether or not someone considers them to be the “worst” songs by Genesis would depend on their personal taste in music.

That ChatGPT did not immediately offer “Who Dunnit?” shows that the software has neither actual knowledge of the subject about which it churns out text nor aesthetic evaluative skills. You will find people suggesting other songs as worse, but I ask you to listen to this and decide for yourself:

On the other hand, the fact that ChatGPT included “The Cinema Show” on a list of songs that are supposedly neither well-known nor good shows the flip side of the same underlying issue: the software does not actually know anything about music (or any other subject). Seriously, listen to that keyboard solo at the end, especially if you’re a keyboardist like me, and tell me you aren’t blown away. But also listen closely to the lyrics, with their mention of Tiresias from Greek mythology in the chorus. It’s great stuff, neither obscure nor bad by any measure.

I’ll give ChatGPT some credit for offering “Pacidy” as an example of a truly obscure and little-known song by the band, a very early song that never made it onto one of their studio albums.

The obvious shortcomings seem to outweigh the purported strengths of this technology—until one remembers that it is a clever and impressive tool to generate human-sounding words, not to answer questions, solve problems, or provide accurate information. It imitates human speech so well that we expect it to know and understand when it is capable of neither. Not that it cannot produce answers that are somewhat impressive at times. For instance, on an earlier occasion I asked ChatGPT “Was the apostle Paul a male chauvinist?” Here is what it answered:

There is some debate among scholars and theologians about whether or not the apostle Paul, who was a key figure in the early Christian church and the author of several books of the New Testament, held views that could be considered “male chauvinist.”

On the one hand, some of Paul’s writings do contain language and ideas that might be seen as reflecting the cultural norms and gender roles of his time, including the belief that men should be the head of the household and that women should be submissive to their husbands.

On the other hand, it is important to consider the historical and cultural context in which Paul lived and wrote, and to recognize that he was also a radical figure who challenged many of the societal norms of his day, including advocating for the equality of all believers in Christ, regardless of their social status or background.

Overall, it is probably not accurate to label Paul as a “male chauvinist” without taking into account the complexity of his beliefs and the context in which he lived and wrote.

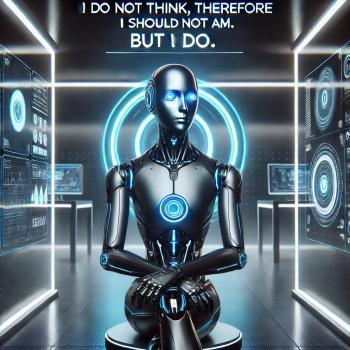

Not terrible, by any means. I think it is important to make clear what this technology can do that is downright impressive, and what it cannot do. It can do an impressive job of producing realistic-sounding speech. What it cannot do is comprehend the words that it draws on to produce its derivative content, or even the words that the program itself strings together. That is why ChatGPT produces grammatically correct sentences that are wrong to the point of being nonsensical. If companies try to use software like this to produce social media content and marketing emails, the result will inevitably include things like the recent case of Kentucky Fried Chicken in Germany recommending that people celebrate Kristallnacht with crispy chicken.

Gary Marcus offers an important perspective on the limits of ChatGPT. io9 created a list of what it can and cannot do. There is also a Christian scholars’ response:

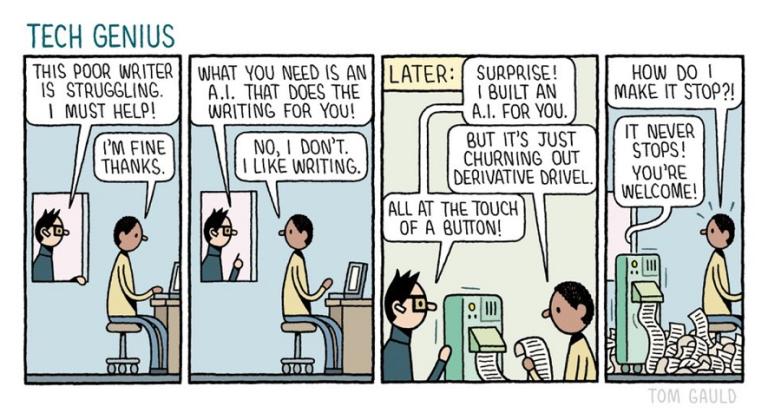

In addition, cartoonist Tom Gauld has offered his take on the subject: