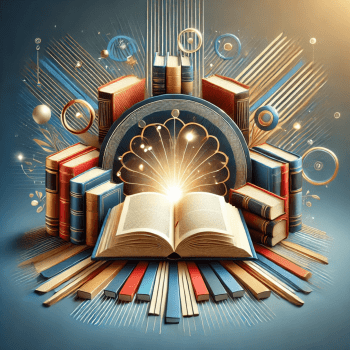

I have posted previously about the bestselling book Sapiens: A Brief History of Humankind by Yuval Harari, a professor of world history. His book wrestles with how we humans reached our present state. To take just one data point, a mere 150,000 years ago, there were approximately one million humans alive on earth. Today there are more than 7.3 billion of us, with more than one new human being added to the total each second! So what does the future hold? Will we continue to grow in number and power as a species—in ways that increase wealth inequality, exacerbate climate change, and hasten extinction rates of various plants and animals—or will we also grow in wisdom and responsibility?

As part of answering that question, Harari has released a sequel titled Homo Deus: A Brief History of Tomorrow. The Latin name for our species is Homo sapiens, meaning “wise human.” Harari’s title “Homo Deus” means “godlike human,” and invites us to reflect on the ways that our increasingly godlike powers as a species have both tremendous promise for good and terrible potential for harm.

One of the major trends that Harari foresees is what he calls “Dataism”—in effect, an emerging “religion of data.” Some of you may recall my previous post on “What Is Religion?” One of the many definitions of religion explored was from the twentieth-century theologian Paul Tillich, who argued that something is a religion if it is the “ultimate concern” of an individual or group. From that perspective, Harari is correct that the growing fervor around data collection, management, and analysis is increasingly religious for adherents of what is sometimes called the Quantified Self Movement (336).

Fitbits, for example, are one among many wearable devices that collect data including “number of steps walked, heart rate, quality of sleep, steps climbed, and other personal metrics involved in fitness.” Those of you with iPhones may or may not know that if you tap the heart icon on your home screen labeled “Health,” you will find that Apple is collecting as much as possible of that same data about you, depending on how much you carry your iPhone. (They are definitely tracking it if you have an Apple Watch, which is both quite useful and ripe for exploitation.) I suspect there are equivalent functions on Android devices.

Some of you may recall that back in 1984, Apple launched a Super Bowl advertisement for the Mac that alluded to George Orwell’s dystopian novel 1984 and declared, “1984 won’t be like ‘1984.’” The ad’s subtext was that Apple computers offered “freedom” from Big Brother, the IBM PC. Ironically, Apple may be one of the new Big Brothers.

To say more about both the potential promise and peril of personal data collection, consider a study that Facebook recently conducted on more than 86,000 people. The same hundred-item personality questionnaire was completed by (1) individuals about themselves; (2) “work colleagues, friends, family members and spouses” about that individual; and (3) a Facebook algorithm about that individual. Then the results were compared:

The Facebook algorithm predicted the volunteers’ answers based on monitoring their Facebook Likes—which webpages, images, and clips they tagged with the Like button…. Amazingly, the algorithm needed a set of only ten Likes in order to outperform the predictions of work colleagues. It needed seventy Likes to outperform friends, 150 Likes to outperform family members, and 300 Likes to outperform spouses. In other words, if you happen to have clicked 300 Likes on your Facebook account, the Facebook algorithm can predict your opinions and desires better than your husband or wife! (345)

I have been on Facebook for more than a decade, and I would guess that I contribute well over 70 Likes most weeks. I get a lot of benefit from Facebook, from increased connections with friends, family, and colleagues to increased awareness of trends of both personal and political interest. But we all need to be increasingly aware of the snowballing implications of the information many of us are giving away.

To name just one among many implications, that Facebook study “implies that, in future U.S. presidential elections, Facebook could know not only the political opinions of tens of millions of Americans, but also who among them are the critical swing votes, and how these voters might be swung” (346). As some of you may have seen in the news, for the past few months, Mark Zuckerberg, Facebook’s co-founder and Chief Executive Officer, has been in the process of visiting all fifty U.S. states. During his visits, he has been doing many of the same things that candidates for political office do. He may have nothing more in mind than better understanding the many different lives of Facebook users across our country in order to improve his product. Or maybe he is planning to run for President. I don’t know either way. But I invite you to consider this quote from Harari, which lands somewhere between the flippant and the profound: “In the heyday of European imperialism, conquistadors and merchants bought entire islands and countries in exchange for colored beads. In the twenty-first century, our personal data is probably the most valuable resource most humans still have to offer, and we are giving it to the tech giants in exchange for email services and funny cat videos” (346).

(I will say more in my post tomorrow on “Why Robots–Not Immigrants–Are Coming for Your Job: The Promise & Peril of Dataism as Religion.”)

The Rev. Dr. Carl Gregg is a certified spiritual director, a D.Min. graduate of San Francisco Theological Seminary, and the minister of the Unitarian Universalist Congregation of Frederick, Maryland. Follow him on Facebook (facebook.com/carlgregg) and Twitter (@carlgregg).

Learn more about Unitarian Universalism: http://www.uua.org/beliefs/principles