My father is an engineer. He designs, tests, and troubleshoots airplane engines. Ever since childhood I’ve had the basic concepts of engine design explained to me multiple times. It has something to do with air being sucked in at high velocity and emerging from the back of the engine, creating thrust. There are sometimes propellers involved in this process, and lots of fans, and the word “torque” may or may not have appeared in my father’s explanations.

Basically, I do not understand airplane engines. I never took physics in high school. I quit math after grade eleven. The fact that I’ve had them explained to me literally dozens of times by a senior engineer at Pratt and Whitney does not make up for my near-total ignorance of the fundamental principles of engine design.

If you are ever looking for advice on what kind of engine you should buy for your shiny new airplane, you should not come to me. I would tell you that you should buy from Pratt, on the obviously somewhat biased basis that my father works there. My opinion, if I managed to develop one, would be more or less completely worthless.

Most of us understand that if we are more or less completely ignorant we are unable to offer useful advice or critique when it comes to disciplines like engineering, brain surgery, hydroponic gardening, or the intricacies of the Sudanese legal code. We don’t feel entitled to an opinion about these things, and admitting that we’re unqualified to form one doesn’t feel like an insult or a slight to our intelligence.

But there are other disciplines (politics, psychology, economics, even medicine or, more recently, canon law) where we are accustomed not only to forming and holding opinions, but also to insisting that we have a right to those opinions, no matter how ill-informed they are.

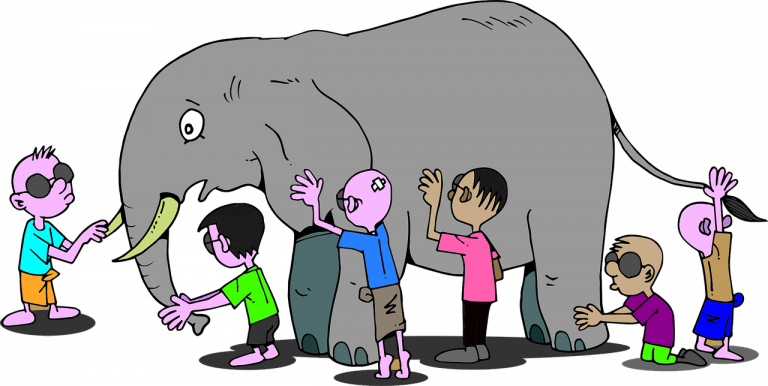

There are, I think, a few reasons why this happens, and knowing what they are can be valuable if you want to avoid becoming a poster child for the Dunning-Kruger effect.

Games of Truth

Last fall, I was chasing my kid around the park and there was a group of boys playing basketball and talking about chemistry. One of the kids was clearly really interested in it, and he was opining about the boiling and sublimation points of different substances, and how that might affect their performance in various sci-fi scenarios. It was clear that he had been memorizing data off the periodic table, and that his opinions were basically a means of playing with the data and turning it into active knowledge. (This is, incidentally, how smart people remember stuff: by using information instead of just memorizing it.)

Developing opinions, then, is actually an important part of knowledge-play. Whenever we hit a new plateau in our understanding of something, we are likely to cement the new information by forming opinions about it. Later, as we progress, we’ll get to the point where we realize that our understanding is still radically incomplete, but when we’re first learning something it’s actually important for us to have confidence in the knowledge that we acquire.

This is part of why there isn’t a polite way to tell an opinionated person that they don’t actually know what they’re talking about – psychologically, it’s similar to telling an enthusiastic child that their fabulous macaroni tower is not going to revolutionize the field of architecture. The feeling that we’ve mastered something is an important part of our motivation for learning.

The problems arise when a person starts recruiting other people to live in their Kraft Dinner high-rise; when they don’t realize that they are playing with knowledge, not expounding truth. It’s okay for a five-year-old to think that they are a genius, because outside of children’s television, nobody ever puts a five-year-old in a position to influence real-life decision making. Once people reach adulthood it’s important to temper that enthusiasm for new knowledge with a healthy dose of humility towards truth.

Manufactured Confusion

One of the drawbacks of democracy is that it is often in the interests of powerful actors to convince people that they know more than they do about controversial political and economic situations. You need to build consensus around a particular point of view in order to enact legislation, and this means mobilizing large numbers of basically ignorant people to advocate for a particular position.

Often folks who have agendas will use propaganda to create the illusion that particular facts have been “proven,” and that specific courses of action follow from these alleged truths. By using expert testimony (often from ‘experts’ who are outliers in their fields), citing dubious research, and throwing around a lot of jargon, it’s easy to make people believe that they have been educated when in fact they’ve just been manipulated.

Of course, it’s not only politicians who create false certainties in this way. Advertisers, activists, religious authorities, and even ambitious academics may stand to benefit by convincing you that their ideas are built on a solid foundation of demonstrable fact… especially when they are actually quite flimsy.

There are a couple of ways to guard against this kind of manipulation. One is to make sure that you are getting your information from multiple sources with different perspectives. If a particular source claims that it is the only reliable authority and that everyone else is lying, that should always be a red flag. Absolute or grandiose claims are also problematic: one of the most reliable ways to tell when someone actually knows their stuff is that they will readily admit their knowledge is provisional, incomplete, and imperfect. Think of the difference between Thomas Aquinas, who knew that the Summa was “straw,” and an internet Thomist who thinks it is Immutable Truth.

Finally, follow up citations. Does the study or document being cited actually say what your source claims it says? Is it being cited out of context? Does the link go to an actual study, or only to an abstract (someone citing an abstract probably hasn’t read the study)? Are they citing solid peer-reviewed research in a reputable journal, or does the link go to a source that is designed to look credible but that is actually only representing a single, narrow agenda? Remember that dishonesty in advertising is often quite insidious: there are organizations and journals out there that deliberately mimic reputable sources in order to gain credibility.

While it’s normal for journalists and opinion writers to make mistakes occasionally, if links consistently lead to problematic sources this is a good indicator that either the writer is producing propaganda or they aren’t being very diligent in their research.

Nobody Knows, Anything Goes

Ironically, the more complex and difficult a field of study is, the more likely it is that we will feel entitled to a completely uninformed opinion about it.

Part of the reason why most of us don’t feel qualified to have opinions about airplane engines is that they are actually relatively simple compared to, say, consumer behaviour or the causes of the Peloponnesian War. A limited number of factors, most of which are known to an acceptable degree, determine whether an engine will lift a mass of airplane-shaped metal into the air and keep it there all the way to San Francisco, or not. If you don’t know what these factors are, or how to account for them, it’s pretty easy to demonstrate that you don’t know enough to engineer airplane engines.

Simpler systems also allow for a higher degree of falsifiability. If someone claims to have invented an engine that uses air for fuel, produces no waste products and is cheap and easy to produce, it’s relatively easy to figure out whether they are lying or not: you get an engineer to take a look at their blueprints. If they can’t produce the blueprints because they were “stolen by the CIA” or are being “suppressed by Big Oil” you can comfortably ignore them: most likely they are delusional, but if not it makes no difference unless you happen to be in a position to wrest these revolutionary plans back from the hands of the Illuminati.

When a system is really complex, as is usually the case in the human sciences, people who have spent their entire lives studying the questions often radically disagree. Nobody really knows, but lots of people have theories that they are passionately attached to.

In such cases, the lack of agreement at the top can lead to the illusion that any theory is just as good as any other and that any claim to superior knowledge is basically “elitism.”

This is frankly silly. The more complicated an issue is, the more you need to know to have an opinion on it that even has a chance of being useful, much less true. If someone has read half a dozen articles on the internet and thinks they’ve solved a problem that has confounded the best minds in the field for centuries, or even millennia, it is all but guaranteed that their opinion is both useless and false. Nobody has a “right” to mindlessly cling to their beliefs when they are wrong.

This doesn’t mean that we can’t discuss or debate these matters, even passionately, because discussion and debate can be a great means of educating ourselves and others. But we should have the humility to recognize that what we are doing is learning and exploring ideas, and that the most likely (indeed the ideal) outcome of this is that we will have to modify our position in light of whatever facts the other person possesses that we lack.

If the debate has ground down to the point where your only move is to assert a “right” to your opinion, what that really means is that your opinion needs more development. It’s time to say, “Thanks. This has been a great discussion, and you’ve given me a lot to think about.” And then, go think about it, confident in the knowledge that when the best minds have yet to come up with an answer, there is literally zero shame in being wrong.

Image credit: pixabay

Stay in touch! Like Catholic Authenticity on Facebook: