Sometimes my class on Religion and Science Fiction seems to be incredibly practical, despite the impression the title might give.

Yesterday we talked about whether it would be a good idea to ask an artificial intelligence whether God exists.

The conversations we had were great, and included a contribution from Siri which led to one student being dubbed a “telephone evangelist.”

Some of you may remember me blogging about a movie I saw in 2014 at Gen Con called “The God Question.” You can purchase and view it on Amazon. It is about precisely this question: what would happen if a supercomputer were set to work on the question of whether God exists? Here’s the trailer:

Towards the end of class, I talked about upcoming topics. Next week one of the subjects is whether it would make sense for us to hand over the running of our society to a machine programmed to act in our best interest. Sci-fi stories such as “The Return of the Archons” from the original series of Star Trek suggest that the answer is no. But the present election makes it seem like a more real issue, and so I think I’m going to ask students not just to consider the question in the abstract, but to discuss, and perhaps even role-play, a debate that adds a robot as a third candidate alongside Donald Trump and Hillary Clinton. Who would they vote for, and why?

Who would you vote for, and why?

It seems I’m not the only person exploring this question, and so here are some depictions of robots as presidents or presidential candidates:

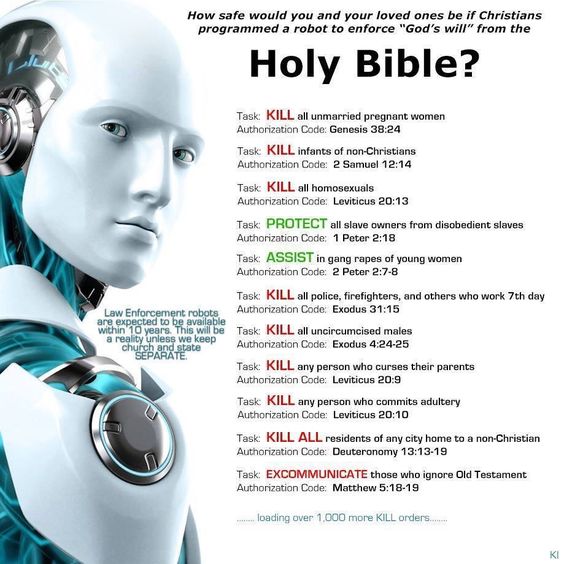

We will also be talking about whether rules like Asimov’s Three Laws of Robotics can keep us safe, and that is interesting to think about in conjunction with this image, which is not without its problems (to say the least), but nonetheless clearly sits at the intersection of religion and science fiction…