In the recent thread on human-independent morality, Ash had a question for me and Adam Lee:

I’m curious if you both consider morality in the way that Harris and Carrier do, as a means to achieve maximum well-being and minimum suffering. Speaking for myself, this is the only way I can see morality being objective. I agree with Harris that there might not be any one single moral “code” that can achieve a given end in this framework, but that one can nevertheless describe all such codes as either promoting or preventing well-being, which is an objective question.

So, one of the biggest problems I had with Harris when I read The Moral Landscape is that defining moral codes as good or bad insofar as they promote well-being doesn’t actually help me categorize them. Unless I’m a Dark Kantian, ‘well-being’ is exactly what I’m trying to define when I’m trying to pick moral actions, so saying I should pick moral systems that maximize it is tautological. If there were ever a phrase that needed to be tabooed…

So if you want to start defining well-being, you need to start by talking about your metric. Is it dopamine release and subsequent subjective feelings of pleasure? Congratulations! Aldous Huxley will meet you with your dose of soma behind Door Number One.

Are our subjective preferences untrustworthy since we can honestly want something we don’t really want? Probably! Is this a defeater for living by objective moral codes? Not necessarily. After all, our perceptions of the physical world are unreliable and our intuitions need revamping. Our causal reasoning is arguably worse, but we still believe we can fumble our way to a less wrong map of the world around us. We hope that we can identify and compensate for our moral blind spots just as we do for other built-in errors.

But in moral matters, the difficult question is how we notice when we’ve fallen into an error. There’s no moral test that we fail as obviously as we fail at gauging risk when we buy a lottery ticket or skip buckling a seatbelt.

Since I’m a virtue ethicist, I would say that one marker of a bad moral choice is if it makes you feel less attached to other moral opinions you previously thought of as very important, particularly if you feel less attached to your old principles because they would damn your recent actions. I’d also tag as questionable any choice that puts you further away from recognizing the consequences of your actions as attributable to you. (That is, any decision that leads you to say “Well, this way my hands are clean” isn’t necessarily a bad choice, but it merits increased scrutiny.)

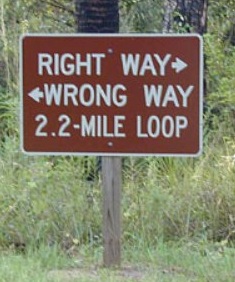

When it comes to moral philosophy, there seems to be a strong preference for a Grand Unified Theory. I am frequently told by atheist and Christian friends that my views on morality would be more logically consistent if I converted and were able to say something like “the telos of humans is to become Christlike” instead of “the telos is to develop and improve character in a lot of complex ways that are hard to synthesize into a general rule that remains a helpful guide in a lot of situations, so I calls ’em as I sees them and try to backpropagate wherever I can.”

It’s one thing for Christians to prefer the simpler formulation, since they also believe the underlying metaphysics are true, but I find it infuriating when my nonbelieving friends think I should pick a simpler system that I don’t have confidence in over the hodge-podge of specific rules I do find compelling. I shouldn’t get off the hook, but it seems unreasonable to praise people for adopting very unified systems that seem to give wrong results (like Objectivism or nihilism) instead of admitting there’s still work to be done.

Physicists haven’t got to a Grand Unified Theory yet, and their profession has a good deal more consensus on what evidence looks like than philosophy does.