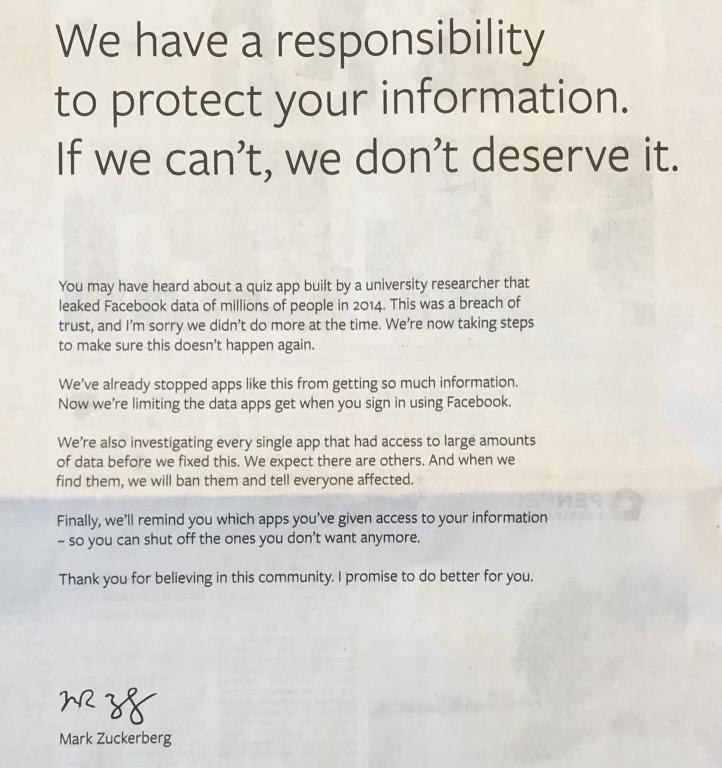

Fake news is as old as politics (which is as old as humankind), but the problem grew much worse during the 2016 elections. It was a key issue for Facebook CEO Mark Zuckerberg when he faced congressional questioning earlier this year.

Mr. Zuckerberg assured Congress that his company could and would fix the fake news problem with A.I., if not today, then at least within the next five to 10 years. This sounds like a “fight-fire-with-fire” strategy: if technology is fueling the problem, why not use technology to stop it?

In spite of Silicon Valley’s best efforts, however, A.I. does not seem to be winning the war. Fake news continues to spread, disrupting elections and inflaming hateful conflicts. Indeed, A.I. may be making the problem worse by broadening the reach of social networks like Facebook and WhatsApp. Mr. Zuckerberg had an answer for this at the congressional hearing, too: he called it an “arms race.” Katie Harbath, Facebook’s director of global politics and government outreach, explains: “This is really going to be a constant arms race. This is our new normal. Bad actors are going to keep trying to get more sophisticated in what they are doing, and we’re going to have to keep getting more sophisticated in trying to catch them.”

Obviously, Facebook is not to blame for bad actors, and it did not create the problem of fake news. It simply happens to be the biggest force on the front lines of the battle at this moment. Because the company is leading the advance of technology, however, its techniques and strategy are worth studying.

Facebook’s problems have continued to pile up over the past few months. Its stock peaked and then dropped over the summer. As it became apparent that the company was not winning the war against fake news, and as the current election season heated up, Facebook announced the creation of a “war room” in mid-September. The announcement did little to stop the slide in the stock price or to produce any wins in the war, so the company decided to hold an open house and invite journalists to see the war room firsthand.

It became immediately clear that Facebook had taken steps to “up their game” in the fight and had filled the war room to capacity with a (not-so) secret weapon. What did visitors find at the heart of the war room? Souls. Dozens of them. Facebook has brought in employees from 20 teams across the company to staff the war room 24/7. Like Churchill’s war room in 1943, Facebook’s war room in 2018 is filled with humans deciphering data, aided by the best technology money can buy.

Gary Marcus and Ernest Davis conclude that A.I. won’t be able to distinguish truth in news reports for at least several more decades, because “existing AI systems lack a robust mechanism for drawing inferences as well as a way of connecting to a body of prior knowledge.” For the time being, at least, we cannot trust A.I. to sort truth from falsehood, or partial truth from a lie. Those fine distinctions require human gifts of perception: a finely nuanced understanding of innuendo, motivation, and hidden agendas.

I admire Facebook’s recognition of the need to rely on human powers of perception, aided by A.I. Zuckerberg’s claim that he can fix fake news within five to 10 years, however, calls for skepticism, first of all because it is self-serving. I am not questioning his sincerity or authenticity. He wants to believe it, of course. In claiming that he can develop an A.I. solution, he remains consistently true to his own worldview. His claims are grounded in idealism: the belief that he and the company he owns will prove capable of channeling all intelligence and thought through their proprietary pipes, gates, and filters. Furthermore, and this is the crucial key to such idealism, this worldview rests on the belief that he and his company are good, well-intentioned, and without sin.

Eduardo Ariño de la Rubia, a Facebook data scientist working in this arms race, sounds more like a realist. He says, “A.I. cannot fundamentally tell what’s true or false—this is a skill much better suited to humans.” His boss, Zuckerberg, ever the idealist, nevertheless believes it is just a matter of time until Facebook’s algorithms are good enough to do so. The good news, at least from Facebook’s point of view, is that in the foreseeable future we will be able to trust Facebook to feed us the unadulterated truth, with no need for any other sources.

The contrast between realism and idealism in these perspectives points to the need for a more thoughtful approach to ethics. A.I. is a powerful tool for good, but it is neither a cure for bad actors nor a corrective for the self-serving tendencies of the human heart.