At CTNS (Center for Theology and the Natural Sciences) in Berkeley, Braden Molhoek and colleagues are working on a Templeton-funded research project dealing with AI and Virtue (Molhoek 2025). Christopher Reilly at ITEST (Institute for Theological Encounters with Science and Technology), in contrast, has just published a new book, AI and Sin. Well, virtue and sin do waltz together, don’t they? Let’s take a look at Reilly’s new book in light of our need to adumbrate the various relations between AI and Faith.

AI and Sin + AI and Virtue

Our research questions in Berkeley are two: (1) can AI itself become virtuous? And (2) can AI aid a human person in becoming virtuous? Reilly asks one question. What is that question? Here it is: does the presence, development, and use of AI motivate sinful human action? Reilly’s answer: yes (Reilly 2025, 4). AI is not inherently evil. Its evil is solely the product of personal human usage (Reilly 2025, 7). Well, sorta.

“There is something particularly concerning that is going on with AI technology. The risk of doing evil actions is clearly enhanced with AI” (Reilly 2025, 11).

If AI, like other tools, is morally neutral, what could be “particularly concerning”? Reilly answers: the rapture. By “rapture” he refers to a spiritual symbiosis between the machine and its user.

“The user and developer [of AI] are enraptured by the technologies’ enabling power as well as the appearance of spiritual – even magical – effect, and therefore imagine a definition of goodness expressed in the same information-laden terms used to describe the technology and its machines” (Reilly 2025, 16).

More.

“Persons’ experiences with robot machines have been shown to generate hostile feelings and actions, including antisocial behavior toward other human beings” (Reilly 2025, 113).

What is the root cause of such sin?

The Sin of Acedia

Reilly goes straight to what he deems the most relevant of the Seven Deadly Sins, acedia (ἀκηδία). Here is the book’s thesis.

“The aim of this work is to demonstrate plausibly that AI proliferation motivates the vice and sin of acedia through the intermediate factor of instrumental rationality” (Reilly 2025, 170).

When we think of acedia we typically think of apathy or sloth. For Reilly, acedia is characterized by depressed idleness and an anxious inability to be at rest either physically or spiritually (Reilly 2025, 22). Acedia retards our ambition to engage in acts of charity.

AI-inspired acedia will lead to a lower capacity to engage in complex thinking because our thought will be reduced to instrumental reasoning. In schoolwork, ChatGPT will lead to plagiarism. As parish priests and pastors ask LLMs to write their homilies, the sharpness of Christian faith will be dulled (Reilly 2025, 155). The antidote to acedia is stability accompanied by guardrails regarding our relationship to the AI temptation (Reilly 2025, 181).

Of greatest concern to Reilly is that acedia can lead to personification. Just as children personify their teddy bears, fiction writers and cinema directors personify robots. “One of the dangers of AI that may lead in the direction of acedia is anthropomorphism,” writes Reilly.

What’s wrong with anthropomorphism? It “is the tendency (perhaps a naturally ingrained one) to perceive and relate to a non-human object as if it were human” (Reilly 2025, 105). We mistakenly believe that machine intelligence understands information and, thereby, understands us. But nobody is at home in a robot or chatbot. “To suggest that a LLM knows or understands anything is to make a category error: attributing a mind-centered process to an artifact that does not have a mind” (Reilly 2025, 111).

My Question to Father Justin

Christopher Reilly reminds us of the misadventure with Father Justin. Recall how the cartoon priest Father Justin appeared on Catholic Answers as a chatbot to catechize and teach. Even with a thousand users per hour, the outcry by April 2024 about the creepiness of such a chatbot experience led to a reversal. Catholic Answers responded by temporarily removing Father Justin from its website. But Father Justin is back by popular demand.

I asked Father Justin about acedia and received the expected answer. But Reilly would not approve. He warns against “the dangers of anthropomorphism,” reminding us that such a chatbot “is not a real person” (Reilly 2025, 112).

Religious AI as Our Teacher

Despite Christopher Reilly’s demurral, some religious leaders are finding spiritual value in AI enhancement. Marco Schmid at St. Peter’s Chapel in Lucerne, Switzerland, relies on a Jesus chatbot to teach us how God loves both angels and humans and even blesses one team in a pool tournament. The Digital Future working group of Sinai and Synapses employs Sefaria, a well-organized digital library of Jewish sacred texts. Muslims similarly find they can rely on the digitization of sacred data to enhance spirituality. According to Majd Hawasly at the Qatar Computing Research Institute, alignment is requisite.

“The quality and purity of the data used are paramount, that is, being free from biases, misconceptions and harmful content to ensure that AI produces responses that are in line with human values, ethical standards and Islamic teachings.”

With this in mind, we might suggest a reformulation of Reilly’s fear: AI will lead us into specific sinful behavior if we align machine intelligence to our pre-existing acedia. AI does not establish acedia. Rather, AI provides the slothful person with a specific form of expressing acedia.

The moral question asked by most ethicists is this: to what should AI become aligned?

Alignment with Sin

When in 2023 Silicon Valley techies went public with their fears of an AI takeover and the possible extinction of Homo sapiens, they mitigated their fears by relying on the principle of alignment (Peters 2025). Google’s Godmother of AI and co-director of the Stanford Institute for Human-Centered AI, Fei-Fei Li, voiced confidence in the future of AI as long as guardrails keep AI in the status of a tool aligned with human values.

But let’s ask: which human values? Malevolent actors in control of AI are ready and willing to steer the power of this technology in the direction of cybercrime, dominance, and violence. The values to which Fei-Fei Li wants AI alignment are those which contribute benevolently to human well-being. In short, the human race needs to make a fundamental moral decision before alignment is established.

Some malicious actors have already made that decision. They are ready and eager to pull the trigger: to flood social media with automated cyberattacks, deepfake images, revenge porn, and fake news, to sway elections, and to drain our bank accounts. Should the present generation of AI techies distribute the digitized equivalent of AR-15s to malefactors who are ready to deceive, rob, pillage, and destroy?

The public theologian must ask about AI alignment with sin, just as Christopher Reilly reminds us. But my list of sins includes possibilities far more dangerous than plagiarized homilies. Here are two biggies: bias and weaponry.

AI and Sin: Bias?

If our ethical aim is to keep AI aligned with human values, could this include values that are prejudiced, destructive, and dehumanizing? In developing AI through Large Language Models, for instance, might nearly invisible bias become inculcated within AI information? Bias in. Bias out.

“AI can help identify and reduce the impact of human biases, but it can also make the problem worse by baking in and deploying biases at scale in sensitive application areas” (Manyika, Silberg, and Presten 2019).

AI and Sin: Weaponry?

Lethal Autonomous Weapon Systems (LAWS) provide another obvious example. Some armies have already launched autonomous lethal weapons on land, underwater, and in the sky. Once released, there’s no calling them back. And sometimes they kill untargeted people. Dehumanized machines are ready to kill humans with no compunction or remorse.

What obtains for offense can also obtain for defense. Anduril executive Palmer Luckey contends that by employing autonomous systems at scale for defense we can prevent World War III. At first glimpse of an attack, the defending allies would automatically release a fleet of autonomous drones to intercept incoming bombers and missiles. Unmanned submarines, stealthy warships, and pilotless fighter planes in coordinated swarms could overwhelm any aggressor. All systems would be latticed together by AI.

Conclusion

Acedia may bore us. LAWS may kill us. Both should appear on any list of AI enhanced sins.

Patheos H+ 2009: AI and Sin 3

Patheos H+ 2001: Is ChatGPT Intelligent?

Patheos H+ 2002: AI Warning: Utopia or Extinction?

Patheos H+ 2003: Your Robot Pastor is Here

Patheos H+ 2004: AI Brinkmanship

Patheos H+ 2005: Is AI a shortcut to virtue? To holiness?

Patheos H+ 2006: Creating an AI Co-Creator? Philip Hefner’s Mind

Patheos H+ 2007: Hopes and Hazards of AI

Substack H+ 2008: The Two Scares of Superintelligence


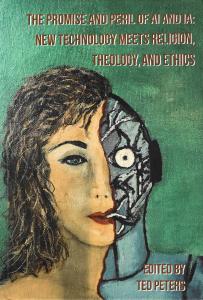

Ted Peters posts articles and notices in the field of Public Theology. He is a Lutheran pastor and emeritus professor at the Graduate Theological Union. His single-volume systematic theology, God—The World’s Future, is now in the 3rd edition. He has also authored God as Trinity as well as Sin: Radical Evil in Soul and Society and Sin Boldly: Justifying Faith for Fragile and Broken Souls. In 2023 he published The Voice of Public Theology with ATF Press. This year he has published an edited volume, The Promise and Peril of AI and IA: New Technology Meets Religion, Theology, and Ethics (ATF), and, with Arvin Gouw, an edited collection, The CRISPR Revolution in Science, Religion, and Ethics (Bloomsbury 2025). See his website: TedsTimelyTake.com.


References

Manyika, James, Jake Silberg, and Brittany Presten. 2019. “What Do We Do About the Biases in AI?” Harvard Business Review, October 25, 2019. https://hbr.org/2019/10/what-do-we-do-about-the-biases-in-ai.

Molhoek, Braden. 2025. “Reflections on the Virtuous AI Project.” In The Promise and Peril of AI and IA: New Technology Meets Religion, Theology, and Ethics, edited by Ted Peters, 473-491. Adelaide: ATF Press.

Peters, Ted, ed. 2025. The Promise and Peril of AI and IA: New Technology Meets Religion, Theology, and Ethics. Adelaide: ATF Press. https://atfpress.com/product/the-promise-and-peril-of-ai-and-ia-new-technology-meets-religion-theology-and-ethics/.

Reilly, Christopher. 2025. AI and Sin: How Today’s Technology Motivates Evil. New York: En Route Books and Media. https://enroutebooksandmedia.com/aiandsin/.