If you look up the word “apocalyptic” in Wikipedia, you’ll read: “from the word apocalypse, referring to the end of the world.” If you look it up in the Oxford English Dictionary, you’ll read: “Of or pertaining to the ‘Revelation’ of St. John” and “Of the nature of a revelation or disclosure; revelatory, prophetic” for the first two senses. In the current online edition, you have to scroll down to “Draft additions March 2008” to get to something comparable to Wikipedia’s definition: “Of, relating to, or characteristic of a disaster resulting in drastic, irreversible damage to human society or the environment, esp. on a global scale; cataclysmic.”

In the popular imagination, the apocalyptic imagination is rather dark. There is a basis for that. In the ancient world, apocalyptic literature was the literature of the oppressed: When the emperor is reading your mail and persecuting you, you might write in coded imagery about a radically different, disruptive, but desirable future.

And radical hope for the future is the great secret and power of the apocalyptic imagination.

In After the Dark, a film which dramatizes a thought experiment about surviving a global nuclear event, the teacher who has led his students through this exercise says to one: “Do you know what ‘apocalypse’ actually means? … It’s from the Greek, apocalypsis, meaning to uncover what you couldn’t see before—a way out of the dark.” This definition has some etymological truth in it: an apocalypse is an uncovering, a disclosure, a revelation. But it includes a gloss—“a way out of the dark”—which is a true interpretation of how apocalyptic literature initially functioned: as imaginative explorations of better worlds and realities.

When we speak about artificial intelligence, we often speak in reductionistic “end of the world” apocalyptic language. Dystopian fear overwhelms utopian hope.

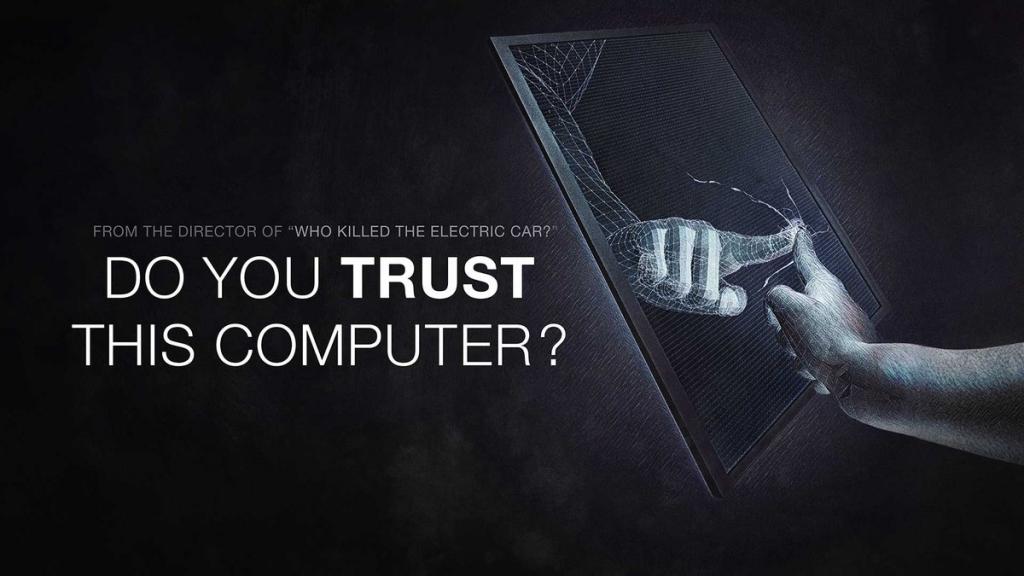

This form of apocalypticism permeates Do You Trust This Computer?, a “troubling” documentary on AI.

The dark bias of the film is evident from the beginning. The opening epigraph is from Mary Shelley’s Frankenstein: “You are my creator, / but I am your master …”

This is followed by a series of statements about what we should fear. To stimulate our imagination, we see a Terminator foot crushing a human skull, HAL’s perfunctory apology, and a scene from WarGames.

Some seemingly good things are mentioned: autonomous cars, health diagnoses, extended intelligence and lives, and Google search. But statements quickly follow about how little we understand, and how often we are surprised by, the ways machines learn. There are questions about trusting artificial forms of intelligence and speculations about machines displacing humans. Jobs will be lost (even good ones). Economic disparity will increase, leading to class warfare. We’ll fall in love with robots, but they’ll kill us (with autonomous weapons) or just manipulate us (with computational propaganda). AI will achieve dominance on the planet and throughout the universe.

Time for Morpheus to school Neo on when dystopia emerged out of utopia: “[At] some point in the early twenty-first century all of mankind was united in celebration. We marveled at our own magnificence … as we gave birth to AI.”

Discussion then slips from human misuses of AI (e.g., all the disinformation and manipulation Facebook has facilitated) into the divinization of AI as a more intelligent, powerful, and teleological force. And the documentary ends with Elon Musk saying we must either merge with AI—an immortal dictator from which we can never escape—or be left behind. The film’s final image is of people looking at computer screens (trustingly) in a coffee shop. Its final quote is: “The pursuit of artificial intelligence is a multi-billion-dollar industry, with almost no regulations.”

In her review of the documentary, Lauren deLisa Coleman asks if it provides “the striking narrative needed to boldly move the needle within the AI arena.” She concludes it does not. “It seems the filmmakers’ objective is paralysis by fear.” Which isn’t all that helpful: “One cannot reach people today just based on general mayhem because, unfortunately, this is now the everyday occurrence. … [the] social movements that the film seems to be trying to spark typically need to be tied to a specific cultural narrative in order to take hold, and it does not.”

Do You Trust This Computer? is a reductive application of the apocalyptic imagination. Instead of seeking to inspire our imagination—stimulating our attention to consider the potential of human creativity and our agency to participate in our destiny—we are simply presented with familiar images of terror and left in a narrative vacuum.

At its best, the apocalyptic imagination provides a robust conceptual and narrative framework for confronting fears and meeting these with greater hopes. It comprehensively confronts the realities—and limits—of human knowledge, space, time, and agency. And it seeks something more: a better future we can create, but also a future that comes to us.

The theologian John Haught points out that “Faith, at least in the biblical context, is the experience of being grasped by ‘that which is to come.’ Any theology that seeks to reflect such faith accurately, therefore, is required to attribute some kind of efficacy to the future … that comes to meet us, takes hold of us, and makes us new.” “The creation of the world,” Haught says, “is energized not so much from what has passed as from what lies up ahead.” Faith is from and for the future.

There are many promising developments related to good AI, including emerging guidelines and ethical frameworks. But, as Luciano Floridi argues, “reacting to technological innovation is not the best approach. We need to shift from chasing to leading.” We need a clearer sense of where we are going, and “we need to anticipate and steer the ethical development of technological innovation.”

The apocalyptic imagination begins with anticipation.