I recently finished reading John Danaher’s book Automation and Utopia: Human Flourishing in a World without Work (Harvard, 2019) and just sent off a review of it. I’ll share that when it’s published, but in this post I’d like to reflect a bit more on the topic of the first part of the book—the automation of work—in light of an event I attended last week: the Technology Alliance’s Policy Matters Summit on artificial intelligence.

This event brought together policy makers, entrepreneurs, technology experts, and other stakeholders to discuss and learn about the current state of AI, entrepreneurial uses of AI, ethical issues related to AI, and the future of work.

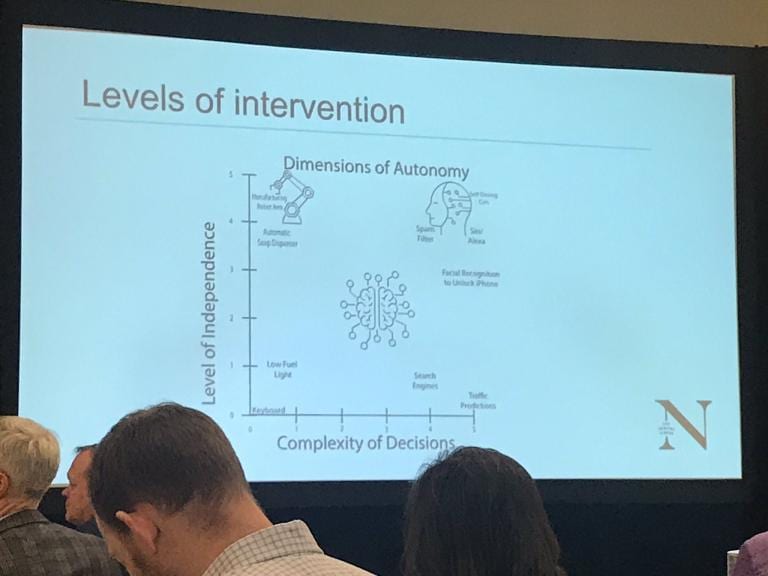

Bethany Edmonds and Oren Etzioni provided helpful frameworks for thinking about automation and AI. Edmonds plots two dimensions of autonomy, level of independence against complexity of decision, on a chart that spans the space between a fuel indicator light on the dashboard (low independence and complexity) and a self-driving car (high independence and complexity). AI is advancing along both dimensions, separately and together. Alexa is an example of a current system that is highly autonomous.

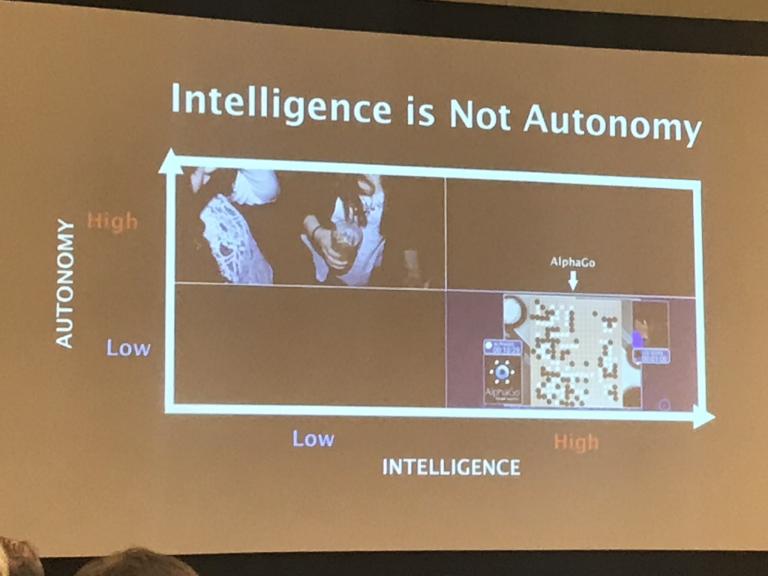

Etzioni, who says that if you’re worried about AI you should just spend some time talking to Alexa, plots autonomy against intelligence, showing the difference between kids getting wasted (high autonomy, low intelligence) and AlphaGo (low autonomy, high intelligence). The most advanced machine learning (ML) systems we have, he concludes, are “narrow idiot savants.”

Edmonds and Etzioni also pointed out the role humans play throughout AI systems, in work that touches data, models, and applications. According to Etzioni, 99% of ML is human work.

After Edmonds’s and Etzioni’s talks, presenters exhibited a number of ML applications in fields as diverse as agriculture, healthcare, and the maritime industry. The most compelling business cases for AI are (1) finding something that cannot be done without it and (2) finding something that can be done more cheaply and quickly with it, which often means without people. (A popular business model at the moment seems to be: find some big datasets that aren’t being used fully and apply ML models to decrease expenses or increase revenues.)

All of this leads to a number of questions about the future of work. Here are a few:

- How much work will be automated? According to McKinsey’s analysis, about 40% of activities and 10% of occupations within the next ten years.

- How do we address workforce disruption? Worker upskilling and protection are two obvious areas of need (see, e.g., the recommendations in Washington State’s “Future of Work Task Force 2019 Policy Report”).

- How do we prepare a workforce for the future? Most commentators call for more STEM education, but that glosses over the question of transdisciplinary competencies: e.g., the need not just for computer science but for computational thinking across the disciplines, or the need not just for data science but for data literacy as a core competency. Also, there is always a reasonable push for more technology in education, but it often ignores the need to develop models that balance instructor, student, and artificial agency.

Automation isn’t new: the digital regulation of time, for example, was preceded by the medieval mechanical clock and the ancient water clock. But as Pedro Domingos points out in The Master Algorithm, learning algorithms are “something new under the sun” (xiv). So when AI systems improve to the point at which they need less human support (engineering as well as so-called “ghost” work, i.e., human labor that makes machines work) and ML replaces more workers, will there be enough work for people? The possibility that there will not leads to a fourth question:

- What does human flourishing look like in a world without enough or any work?

This question alludes to the subtitle of Danaher’s book. In the first part of Automation and Utopia, Danaher argues for two propositions. First, all work performed for economic benefit can be automated. We’ve been automating work for centuries and using automated data processing, including complex information processing (forms of AI), for decades. Over time, we’ve seen automation in agricultural, industrial, financial, legal, and other areas of work. Danaher believes automation will continue advancing until it includes every form of physical, intellectual, and affective work.

Second, Danaher argues that all work performed for economic benefit should be automated. In his view, the present conditions of work are structurally bad: they are precarious, inequitable, oppressive, and unsatisfying for most people. The second half of Automation and Utopia explores two alternative futures, a Cyborg Utopia and a Virtual Utopia, which he presents as possible and practical futures that would be radical improvements on the present.

I critique Danaher’s utopian scenarios in my review and will return to them in a subsequent post. Here, after further reflecting on his book and the Technology Alliance’s AI summit, I’d like to add two more questions about the future of work:

- Is there work that cannot or should not be automated? I would suggest forms of work that require such things as creativity, care, curiosity, and contemplation—activities worthy of our attention and agency.

- Is it reasonable to expect that we could create a radically different and morally superior economic system? Many agree that drastic reform is necessary. But is it not more practical to address the corruption we know than to create a new system that will include new forms of structural corruption?

Finally, looking beyond the practical concerns of the present and the philosophical horizon of utopian speculation, we should consider how the present deformed world of work is already being transformed into, and preparing us for, a new world of work. We have already reformed many of the horrors of work. There are many more to address, and AI should help us with a good number of them. As economic precarity and oppression are reduced or eradicated as drivers for work, we may come closer to experiencing what Dorothy Sayers imagined in her 1942 lecture “Why Work?”: engaging in work as a creative activity pursued for the love of the work itself. So here is one more question:

- What if work is part of what it means to be human? Our evolutionary origins suggest that work created our species (technology preceded us), and our earliest religious texts teach that we were created to work. If we were to remove purposeful work, work that has instrumental value, from our lives, would we be constraining the conditions for human flourishing?

In the season of Advent, Christians reflect on the past, future, and present coming of Christ. This comprehensive orientation to temporality encourages us to consider not only how the past and present are shaping the future but how the future is transforming everything. In our present moment of significant technological transformation, we should be reflecting deeply on how our past experiences are informing—and, more importantly, how our anticipated future is transforming—the reformation of work in the present.