I was considering writing an April Fools’ Day post, but life’s too short to not blog about epidemiology and epistemology.

As is often the case when I go back to Yale for alumni debates, I ended up in some extended theological debates. In a conversation with one friend, we ended up on the topic that used to form the core of my about section: what evidence would persuade me that Christianity (or another religion) is true?

I think it’s possible that I’ve set the bar for proof so high that even a true religion couldn’t pass (it’s probably not a good sign when your possible proofs are physically impossible under current models of physics). On the other hand, I certainly don’t want to keep my mind so open that my brain falls out. But talking only in terms of these two extremes isn’t very helpful, so let’s all take a brief biostatistics detour, and see if it helps. (It was extremely useful in my discussion this weekend.)

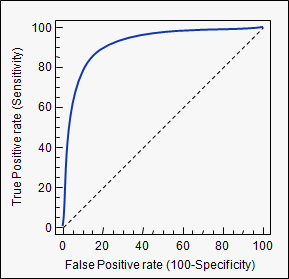

When you’re evaluating a diagnostic test in epidemiology, there are two statistics you really care about:

- Sensitivity – How often does our test successfully return positive results for true cases of the disease? Sensitivity is calculated as (# of sick people correctly identified as sick)/(# of sick people total)

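- Specificity – How often does our test correctly return negative results for people who are healthy? High specificity means few false alarms, so we can be more confident a positive result really means the person is sick. Specificity is calculated as (# of healthy people with a negative test result)/(# of healthy people total)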

You can think of sensitivity as the true positive rate and specificity as the true negative rate. In a perfect world, both statistics would be 100%, but that almost never happens. We have to decide whether we care more about definitely finding everyone who’s sick or whether there’s a risk in casting our net too widely. The TSA prefers high-sensitivity screening, even if it’s a big inconvenience for non-terrorists. When it comes to prostate cancer, we need better specificity, because treatment does more harm than good for a lot of people who test positive.
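If you’d rather see the arithmetic than the definitions, here’s a minimal sketch of the two formulas above in Python. The function names and the counts are made up for illustration; they don’t come from any real diagnostic test.

```python
def sensitivity(true_pos, false_neg):
    """(# of sick people correctly identified as sick) / (# of sick people total)."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg, false_pos):
    """(# of healthy people with a negative result) / (# of healthy people total)."""
    return true_neg / (true_neg + false_pos)


# Hypothetical counts: 100 sick people and 200 healthy people were tested.
sens = sensitivity(true_pos=90, false_neg=10)   # 90 of the 100 sick people flagged
spec = specificity(true_neg=160, false_pos=40)  # 160 of the 200 healthy people cleared

print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

With these invented numbers the test catches most of the sick people but still raises a false alarm for one healthy person in five, which is the kind of trade-off the TSA and prostate cancer examples are about.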

So what we do is move around our positive test cutoff to what seems like the optimal point. To try and find that point, we draw a ROC curve. (ROC stands for Receiver Operating Characteristic, but trust me, it’s not necessary to know what that means.) A ROC curve is a graph of the sensitivity against one minus the specificity (that is, the false positive rate) for a range of possible positive test cutoffs. The curves look like this:

Usually you pick the cutoff for evidence that puts you at the ‘elbow’ — the point on the curve closest to the top left corner at (0,1), unless you’re in special circumstances like the TSA or prostate cancer oncologists.
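Here’s a rough sketch of that elbow-picking rule, assuming a test that gives each person a numeric score and calls anyone at or above the cutoff “positive.” The scores, labels, and helper names (`roc_points`, `elbow`) are all hypothetical, and picking the point nearest (0,1) is just one common rule of thumb.

```python
import math


def roc_points(scores, labels, cutoffs):
    """Return (cutoff, false_positive_rate, sensitivity) for each cutoff.

    labels: 1 for truly sick, 0 for truly healthy.
    A score at or above the cutoff counts as a positive test.
    """
    n_sick = sum(labels)
    n_healthy = len(labels) - n_sick
    points = []
    for cutoff in cutoffs:
        true_pos = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= cutoff)
        false_pos = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= cutoff)
        points.append((cutoff, false_pos / n_healthy, true_pos / n_sick))
    return points


def elbow(points):
    """Pick the ROC point closest to the top left corner at (0, 1)."""
    return min(points, key=lambda p: math.hypot(p[1], p[2] - 1.0))


# Invented data: ten people, scored 1 through 10 by the field test.
scores = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
labels = [0, 0, 0, 0, 0, 1, 0, 1, 1, 1]

best_cutoff, fpr, tpr = elbow(roc_points(scores, labels, cutoffs=range(1, 12)))
print(f"cutoff = {best_cutoff}, 1 - specificity = {fpr:.2f}, sensitivity = {tpr:.2f}")
```

If you’re in one of the special circumstances, you’d swap out `elbow` for a rule that weights the two kinds of error differently instead of treating them as equally bad.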

So the question my friend and I were really kicking around is whether, when we consider religious propositions, we should stick to the standard way of thinking, or whether there’s a reason to prioritize specificity over sensitivity or vice versa.

Generally, whenever we’re being asked to do something that clashes terribly with our moral intuitions (like, say, slaughter Isaac) it seems like it makes sense to stick with a high specificity test. We’d rather miss a few genuine commandments and corrections in order to avoid hurting people by mistake. But we don’t want such a low-sensitivity epistemology and standard of proof that we can never embrace a true philosophy and just stay stuck out on our own, trying to construct an entirely new edifice.

Epidemiologists have it easy. They compute sensitivity and specificity by using a ‘gold-standard’ test (like autopsy) as a comparison to the field test. I’m not really sure how to check the calibration of my sensitivity-specificity trade-off when it comes to ethics and philosophy. Any suggestions?