I ended my last post with a cliffhanger. I was writing about the religious dimensions of that critical year of 1968, noting how radically the common expectations that people of that time held about the future differed from what actually occurred. They got it wrong in so many ways, but one trend in particular – and one key event that December – symbolized the gap between vision and reality. So what was the event? Let me end the suspense. I am focusing on a massive breakthrough that laid the foundation of our modern world of computing and IT – of a major part of how we live now, of how we think, perceive, and remember.

At least at first sight, this particular technological issue does not directly concern religion or faith – but it really does. It also shifted expectations about what the coming decades actually would bring. In 1968, most people expected that space travel would be a leading edge of future science and growth, and that this coming reality would transform many aspects of life – industry and technology of course, but also human consciousness, and attitudes to the larger universe. So yes, that was a deeply spiritual vision, and some religious thinkers tried to prepare for it. In the 1970s, the Episcopal Church introduced its legendarily spacy Eucharistic Prayer C, with its language about “the vast expanse of interstellar space, galaxies, suns, the planets in their courses, and this fragile earth, our island home” (aka The Star Wars Liturgy).

We were going to space, right, to the final frontier? If you had told intelligent observers in 1968 that humanity would go to the Moon, visit a few times, and then just give up on Space, they would have thought you were insane. The common projection was that after the Moon, a manned Mars mission might have to wait until 1986, a delay that seemed impossibly long. And then it didn’t happen. The Via Galactica closed for construction, indefinitely.

Outer Space vanished from our consciousness, or at best remained marginal. It was a matter for fiction, fantasy, and UFO-logy, not for real hard science. But if Outer Space disappeared, Inner Space boomed unimaginably. All the key technological progress and innovation was directed instead to computers and IT, a field that in 1968 was enjoying an amazing efflorescence.

Computers of various kinds were already central to many businesses and industrial enterprises, and of course, to the military. In June 1968, the program plan for ARPANET was published. ARPANET was the direct precursor of the Internet. Also that year, UCLA was selected as the first node in the system, which went operational in 1969.

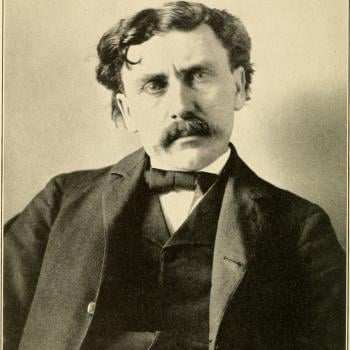

But to return to 1968. One key event of that year occurred on December 9, when Doug Engelbart spoke to the San Francisco meeting of the Association for Computing Machinery and the Institute of Electrical and Electronics Engineers (ACM/IEEE). What he did that day has gone down in history as The Mother of All Demos. I quote John Markoff:

> In one stunning ninety-minute session, [Engelbart] showed how it was possible to edit text on a display screen, to make hypertext links from one electronic document to another, and to mix text and graphics, and even video and graphics. He also sketched out a vision of an experimental computer network to be called ARPAnet and suggested that within a year he would be able to give the same demonstration remotely to locations across the country. In short, every significant aspect of today’s computing world was revealed in a magnificent hour and a half. There were two things that particularly dazzled the audience:… First, computing had made the leap from number crunching to become a communications and information-retrieval tool. Second, the machine was being used interactively with all its resources appearing to be devoted to a single individual! It was the first time that truly personal computing had been seen.

I repeat that: “Computing had made the leap from number crunching to become a communications and information-retrieval tool.” What could be more obvious to us today? Yet it was shockingly new in December 1968.

Or:

> The 90-minute presentation essentially demonstrated almost all the fundamental elements of modern personal computing: windows, hypertext, graphics, efficient navigation and command input, video conferencing, the computer mouse, word processing, dynamic file linking, revision control, and a collaborative real-time editor (collaborative work).

And all this for the first time in a public forum. By the end of his presentation, Engelbart was described as “dealing lightning with both hands.” But he sometimes went into playful mode. In 1967 he had filed a patent application for his “X-Y Position Indicator for a Display System.” At the great Demo in December 1968, he uttered the fateful words, “I don’t know why we call it a mouse. It started that way, and we never did change it.”

Accounts of the event sometimes suggest that the demo floored or “dazzled” the assembled engineers and techies. Actually, Engelbart received very few questions, mainly because what he was describing was so far beyond their ken, or beyond anything they could imagine. Most clapped politely and left for the hospitality suite. Only gradually did the implications sink in.

In retrospect, so much of later personal computing, and all that it would imply, dates from that day. This includes the vast corporate wealth, the economic and social transformations, the restructuring of cities, and all the impacts of social media – nothing less than the reshaping of human consciousness and memory.

Now think of the religious implications of IT, personal computing, and of social media. Think what that means in terms of consciousness, of how we develop and exchange ideas, how we interact and remember. Also what that means in terms of “being present.” Should we not count all this as among the most significant religious developments of the modern age? If so, then we should look back to that pivotal December day in San Francisco. Now that is an anniversary we need to commemorate.

The future suddenly became visible.

There is a terrific account of this pioneering era of high tech in Leslie Berlin’s *Troublemakers: Silicon Valley’s Coming of Age* (2017).

AND AS A LATER UPDATE (December 2018): See the account of the event in Wired magazine. And (also from Wired) this anniversary piece.